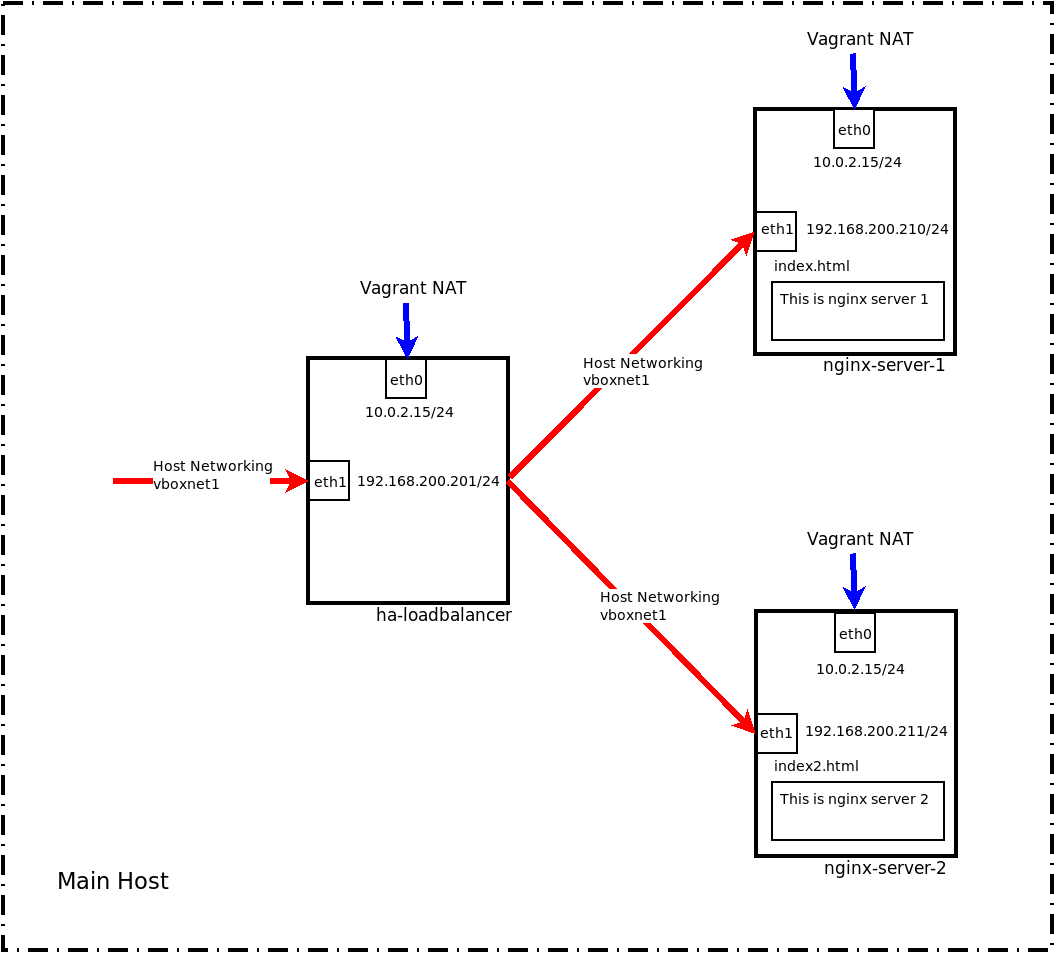

In this post we'll set up a simple Load Balancer using HAProxy to distribute traffic between two Virtual Machines running NGINX. Each backend machine serves a slightly different page, which confirms that both are reachable through HAProxy. The lab uses Vagrant to define the necessary infrastructure and do the basic provisioning, so it can be run on any suitable Host.

Base Configuration

The Lab runs on a Host with VirtualBox installed, which provides the Virtual Machines. Each Virtual Machine has two interfaces: the first is used by Vagrant to provision the machine, and the second connects the machines to each other. As this is a lab, that second interface is on a host-only network, so the machines can only be reached from the Host itself.
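Because all three machines talk to each other over this host-only network, their private_network addresses have to sit in the same subnet. As a quick sanity check, here is a minimal shell sketch that tests whether an address falls inside a given network. It assumes the subnet is 192.168.200.0/24, matching the /24 prefix that `ip addr` reports on eth1 later in the post; `ip_to_int` and `in_subnet` are hypothetical helpers, not part of the lab's scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check that lab addresses sit on the host-only subnet.
# Assumption: the subnet is 192.168.200.0/24 (the /24 seen on eth1 in this post).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if IP ($1) lies inside NETWORK ($2) with PREFIX length ($3).
in_subnet() {
  local ip net mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Check the three addresses used in the Vagrantfile.
for addr in 192.168.200.201 192.168.200.210 192.168.200.211; do
  if in_subnet "$addr" 192.168.200.0 24; then
    echo "$addr is on 192.168.200.0/24"
  else
    echo "$addr is NOT on 192.168.200.0/24"
  fi
done
```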

A working directory called LoadBalancer has been created on the Host. All the files used can be found at https://github.com/salterje/BasicLoadBalancer.git

The directory has the following layout:

```
LoadBalancer/
├── files
│   ├── haproxy.cfg
│   ├── index2.html
│   └── index.html
├── scripts
│   ├── nginx.sh
│   └── setup.sh
└── Vagrantfile
```

The most important file is the Vagrantfile, which contains the full definition of the Lab. It allows Vagrant to create the Virtual Machines from a base image, known as a Box, along with the associated networks. We are also going to copy some files from the Host to the created servers and use shell provisioning to install all the necessary packages and start the relevant services.

To keep things tidy, two subdirectories hold the files that will be copied to the guests and the shell scripts that will be run to provision them.

The great thing about this arrangement is that the directory can be moved to any other Host running Vagrant and VirtualBox and used to re-create the Lab in exactly the same way every time.
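If you would rather build the directory by hand than clone the repository, the following sketch shows how the layout above could be created. `make_lab_skeleton` is a hypothetical helper; it only creates the directories and empty placeholder files, whose contents still come from the listings in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the empty LoadBalancer/ skeleton.
# File contents are NOT generated here; fill them from the listings in the post.
make_lab_skeleton() {
  local root="${1:-LoadBalancer}"
  mkdir -p "$root/files" "$root/scripts"
  touch "$root/files/haproxy.cfg" "$root/files/index.html" "$root/files/index2.html"
  touch "$root/scripts/nginx.sh" "$root/scripts/setup.sh"
  touch "$root/Vagrantfile"
}
```

Running `make_lab_skeleton` with no argument creates `LoadBalancer/` in the current directory.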

Vagrantfile

The core of the system is the Vagrantfile.

```ruby
# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "centos/7"

  config.vm.define "ha-server" do |ha|
    ha.vm.provider "virtualbox" do |v|
      v.name = "ha-loadbalancer"
      v.memory = 1024
      v.cpus = 1
      v.customize ["modifyvm", :id, "--description", "This is a HA LoadBalancer"]
    end
    ha.vm.hostname = "ha-loadbalancer"
    ha.vm.network "private_network", ip: "192.168.200.201"
    ha.vm.provision "file", source: "./files/haproxy.cfg", destination: "/home/vagrant/haproxy.cfg"
    ha.vm.provision "shell", path: "scripts/setup.sh"
  end

  config.vm.define "nginx-server-1" do |server1|
    server1.vm.provider "virtualbox" do |v|
      v.name = "nginx-server-1"
      v.memory = 1024
      v.cpus = 1
      v.customize ["modifyvm", :id, "--description", "This is an nginx server 1"]
    end
    server1.vm.hostname = "nginx-server-1"
    server1.vm.network "private_network", ip: "192.168.200.210"
    server1.vm.provision "file", source: "./files/index.html", destination: "/home/vagrant/index.html"
    server1.vm.provision "shell", path: "scripts/nginx.sh"
  end

  config.vm.define "nginx-server-2" do |server2|
    server2.vm.provider "virtualbox" do |v|
      v.name = "nginx-server-2"
      v.memory = 1024
      v.cpus = 1
      v.customize ["modifyvm", :id, "--description", "This is an nginx server 2"]
    end
    server2.vm.hostname = "nginx-server-2"
    server2.vm.network "private_network", ip: "192.168.200.211"
    server2.vm.provision "file", source: "./files/index2.html", destination: "/home/vagrant/index.html"
    server2.vm.provision "shell", path: "scripts/nginx.sh"
  end
end
```

It has a separate section for each Virtual Machine. The ha-server section is repeated below with comments:

```ruby
config.vm.define "ha-server" do |ha|
  ha.vm.provider "virtualbox" do |v| # Define the hypervisor that the config is for, in this case VirtualBox
    v.name = "ha-loadbalancer" # The unique name within VirtualBox
    v.memory = 1024 # The amount of memory for the Virtual Machine
    v.cpus = 1 # CPU allocation within VirtualBox
    v.customize ["modifyvm", :id, "--description", "This is a HA LoadBalancer"] # A description within VirtualBox
  end
  ha.vm.hostname = "ha-loadbalancer" # The actual hostname for the Virtual Machine
  ha.vm.network "private_network", ip: "192.168.200.201" # The IP address for the connection to the other Virtual Machines
  ha.vm.provision "file", source: "./files/haproxy.cfg", destination: "/home/vagrant/haproxy.cfg" # Copy a file from the Host to the Virtual Machine
  ha.vm.provision "shell", path: "scripts/setup.sh" # A shell provisioner and the location of the script used to set up the guest
end
```
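Since every machine gets its own private_network address, a duplicated IP is an easy mistake to make when copying a section to add another server. The sketch below uses hypothetical helpers, `vagrantfile_ips` and `duplicate_ips`, to pull each address out of a Vagrantfile with grep and report any that appear more than once. It assumes the addresses are written exactly in the `ip: "a.b.c.d"` form used above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: list private_network IPs in a Vagrantfile and find duplicates.
# Assumes each address is written as ip: "a.b.c.d", as in the lab's Vagrantfile.

# Print every IP address assigned with ip: "..." in the given file.
vagrantfile_ips() {
  grep -oE 'ip: "[0-9.]+"' "${1:-Vagrantfile}" | sed -E 's/ip: "([0-9.]+)"/\1/'
}

# Print any address that is assigned more than once (prints nothing if all unique).
duplicate_ips() {
  vagrantfile_ips "${1:-Vagrantfile}" | sort | uniq -d
}
```

Run from the LoadBalancer directory, `vagrantfile_ips` should list the three addresses and `duplicate_ips` should print nothing.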

Setting Up Two NGINX Servers

Both NGINX servers use CentOS 7 as their base operating system, with NGINX installed and enabled to start at boot. Each server serves a simple index.html page, which makes it easy to tell which server the Load Balancer has sent a request to.

The Vagrantfile defines the build of the Virtual Machines, setting all parameters including memory, CPU, hostnames and IP addresses. The file provisioner copies each server's test page into the vagrant user's home directory, and the shell provisioner updates the VMs, installs the packages, moves the test page into place and starts the services.

This will set up the two Virtual Machines to start NGINX and serve a dedicated test page on each server, which will be used to prove the Load Balancer is working.

The provisioning shell script (scripts/nginx.sh) runs seven tasks to set the machines up:

```shell
echo "Task 1: Perform YUM update"
yum -y update

echo "Task 2: Add epel-release"
yum -y install epel-release

echo "Task 3: Install nginx"
yum -y install nginx > /dev/null 2>&1

echo "Task 4: Modify index.html test page"
sudo cp /home/vagrant/index.html /usr/share/nginx/html/index.html

echo "Task 5: Enable NGINX"
sudo systemctl enable nginx

echo "Task 6: Start NGINX"
sudo systemctl start nginx

echo "Task 7: Update /etc/hosts file"
cat >> /etc/hosts <<EOF
192.168.200.201 ha-loadbalancer
192.168.200.210 nginx-server-1
192.168.200.211 nginx-server-2
EOF
```
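Appending with `cat >> /etc/hosts` works on the first run, but re-running the provisioner (for example with `vagrant provision`) appends the same three lines again. A minimal idempotent alternative, offered as a sketch rather than the lab's own script, appends each line only when that exact line is missing. `add_host_entry` and `update_lab_hosts` are hypothetical names, and the optional file argument exists only so the helpers can be tried on a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical idempotent replacement for the /etc/hosts heredoc.
# grep -qxF matches the whole line as a fixed string, so an entry is
# appended only if that exact line is not already in the file.
add_host_entry() {
  local line="$1" hosts_file="${2:-/etc/hosts}"
  grep -qxF -- "$line" "$hosts_file" || echo "$line" >> "$hosts_file"
}

# Add the three lab entries; safe to call any number of times.
update_lab_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  add_host_entry "192.168.200.201 ha-loadbalancer" "$hosts_file"
  add_host_entry "192.168.200.210 nginx-server-1" "$hosts_file"
  add_host_entry "192.168.200.211 nginx-server-2" "$hosts_file"
}
```

The same function could replace the matching /etc/hosts step in the HAProxy provisioning script as well.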

Setting Up HAProxy

The HAProxy machine acts as the Load Balancer in front of the two NGINX servers and also uses CentOS 7 as its base operating system. Most of the work is done by the provisioning script, which installs the software packages and starts the necessary services. Because the modified haproxy.cfg is moved into place from the vagrant user's home directory, the script also has to change its SELinux context.

The provisioning script (scripts/setup.sh) runs eight tasks to bring HAProxy up:

```shell
echo "Task 1: Perform YUM update"
yum -y update

echo "Task 2: Install haproxy"
yum -y install haproxy > /dev/null 2>&1

echo "Task 3: Backup haproxy.cfg"
if [ -f /etc/haproxy/haproxy.cfg.orig ]; then
  echo "haproxy.cfg already backed up"
else
  echo "Make a copy of file"
  sudo cp -v /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.orig
fi

echo "Task 4: Change Ownership of haproxy.cfg"
sudo chown root:root /home/vagrant/haproxy.cfg

echo "Task 5: Move File from Home to /etc"
sudo mv /home/vagrant/haproxy.cfg /etc/haproxy/haproxy.cfg

echo "Task 6: Change SELinux File Type"
sudo chcon -t etc_t /etc/haproxy/haproxy.cfg

echo "Task 7: Start HAProxy"
sudo systemctl start haproxy
sudo systemctl enable haproxy

echo "Task 8: Update /etc/hosts file"
cat >> /etc/hosts <<EOF
192.168.200.201 ha-loadbalancer
192.168.200.210 nginx-server-1
192.168.200.211 nginx-server-2
EOF
```

A critical step in the provisioning script is Task 6, which changes the SELinux type of haproxy.cfg to etc_t so that the haproxy service can run. If this is not done, SELinux will not allow the service to run.
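The step is needed because `mv` generally keeps the SELinux label the file had in the vagrant user's home directory instead of giving it a label suited to /etc. An SELinux context has the form user:role:type:level, and it is the type field (etc_t here) that matters. The sketch below uses hypothetical helpers, `selinux_type` and `needs_relabel`, to extract that field and decide whether the chcon call from Task 6 is needed. `restorecon` is the usual alternative; it resets a file to the label defined by the loaded policy rather than a hand-picked type.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking a file's SELinux type.
# A context looks like system_u:object_r:etc_t:s0; the type is the 3rd field.
# (The level field may itself contain colons, but the type is always field 3.)
selinux_type() {
  cut -d: -f3 <<< "$1"
}

# Succeed if context $1 does not carry the expected type $2 (default etc_t).
needs_relabel() {
  [ "$(selinux_type "$1")" != "${2:-etc_t}" ]
}

# Usage on the guest (requires SELinux; GNU stat's %C prints the context):
#   ctx=$(stat -c %C /etc/haproxy/haproxy.cfg)
#   if needs_relabel "$ctx"; then sudo chcon -t etc_t /etc/haproxy/haproxy.cfg; fi
```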

The configuration that enables the load balancing is fairly simple: just a few lines defining the servers to balance between. These lines are added to the original config file, /etc/haproxy/haproxy.cfg, which is replaced as part of the provisioning.

```
frontend http_front
    bind *:80
    stats uri /haproxy?stats
    default_backend http_back

backend http_back
    balance roundrobin
    server nginx-server-1 192.168.200.210:80 check
    server nginx-server-2 192.168.200.211:80 check
```

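The frontend section listens for traffic on port 80 on all addresses and sends it to the default backend, http_back, and the stats uri line exposes HAProxy's statistics page at /haproxy?stats. The backend balances requests evenly, in round-robin order, between the two servers at 192.168.200.210 and 192.168.200.211. The check keyword enables health checks, so a server that stops responding is taken out of the rotation.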
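To make the rotation concrete, the sketch below models how equal-weight round-robin hands successive requests to the two servers in http_back. It is only an illustration with a hypothetical `rr_pick` helper: HAProxy's own roundrobin algorithm also honours server weights and skips servers whose health check has failed, and the server that receives the first request in a real run may differ.

```shell
#!/usr/bin/env bash
# Hypothetical model of equal-weight round-robin over the http_back servers.
# It ignores weights and health checks, which real HAProxy also applies.
backends=(nginx-server-1 nginx-server-2)

# Print the backend that request number $1 (counting from 0) would go to.
rr_pick() {
  echo "${backends[$(( $1 % ${#backends[@]} ))]}"
}

for i in 0 1 2 3; do
  echo "request $i -> $(rr_pick "$i")"
done
```

With two servers the output alternates between nginx-server-1 and nginx-server-2, which is the pattern the curl test later in the post shows.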

Starting Up the Lab

Once the directory has been created, bringing up the Lab is a simple matter of running the following from inside it:

```shell
vagrant up
```

Vagrant parses the Vagrantfile and checks whether the base image (the centos/7 Box) is already on the Host. If it isn't, the Box is downloaded first, and then the rest of the Vagrantfile is processed.

As part of the build, Vagrant automatically creates the keys needed for ssh connections to the newly created machines.

Once each guest machine has been created, the provisioners run: the file provisioner copies files onto the guest, and the shell provisioner pulls down and installs all the packages.

The process will take a few minutes if the machines need to be created from scratch.

Once the machines are up, their status can be checked:

```
vagrant status
Current machine states:

ha-server running (virtualbox)
nginx-server-1 running (virtualbox)
nginx-server-2 running (virtualbox)

This environment represents multiple VMs. The VMs are all listed
above with their current state. For more information about a specific
VM, run `vagrant status NAME`.
```
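When scripting around the Lab it can be handy to check this output automatically. The following sketch, with a hypothetical `all_running` helper, reads `vagrant status` output on standard input and succeeds only if every `(virtualbox)` machine line reports running. It assumes the human-readable layout shown above rather than any machine-readable format.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if every "(virtualbox)" line in
# `vagrant status` output has "running" as its state (2nd field).
# Fails if no machine lines are found at all.
all_running() {
  awk '
    /\(virtualbox\)$/ { seen++; if ($2 != "running") bad++ }
    END { exit (seen == 0 || bad > 0) }
  '
}

# Usage from the LoadBalancer directory on the Host:
#   vagrant status | all_running && echo "lab is up"
```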

If necessary, each machine can be reached over ssh:

```
vagrant ssh ha-server
Last login: Tue Sep 15 13:20:53 2020 from 10.0.2.2
[vagrant@ha-loadbalancer ~]$ ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff
    inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0
       valid_lft 85523sec preferred_lft 85523sec
    inet6 fe80::5054:ff:fe4d:77d3/64 scope link
       valid_lft forever preferred_lft forever
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 08:00:27:f4:08:54 brd ff:ff:ff:ff:ff:ff
    inet 192.168.200.201/24 brd 192.168.200.255 scope global noprefixroute eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::a00:27ff:fef4:854/64 scope link
       valid_lft forever preferred_lft forever
[vagrant@ha-loadbalancer ~]$ cat /etc/system-release
CentOS Linux release 7.8.2003 (Core)
```

All Vagrant connections go through the first interface on the guest (eth0 above), which is always a NAT interface. The vagrant ssh command uses the keys that were created as part of the build and copied to the guest.

To test the Load Balancer, request http://192.168.200.201 several times and check that the page from each server is returned. This can be done with a normal browser on the Host machine or with curl:

```
salterje@salterje-PC-X008778:~$ curl 192.168.200.201
<h1>This is nginx server 1</h1>
salterje@salterje-PC-X008778:~$ curl 192.168.200.201
<h1>This is nginx server 2</h1>
```

The returned messages show that the requests are balanced equally between the two NGINX servers.
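To check the balance over more than two requests, a small loop can send several requests and count which backend answered each one. This is a sketch with hypothetical helpers, `probe` and `tally_backends`; it assumes each test page contains the text "nginx server 1" or "nginx server 2", as in the curl output above, and that the Load Balancer is reachable at 192.168.200.201 from the Host.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for measuring how requests are spread across backends.

# Count how many responses came from each server, based on the page text.
tally_backends() {
  grep -oE 'nginx server [0-9]+' | sort | uniq -c
}

# Send $2 requests (default 10) to URL $1 (default: the lab's Load Balancer).
probe() {
  local url="${1:-http://192.168.200.201}" count="${2:-10}" i
  for (( i = 0; i < count; i++ )); do
    curl -s "$url"
  done
}

# Usage on the Host once the lab is up:
#   probe http://192.168.200.201 10 | tally_backends
```

With roundrobin and both servers healthy, the two counts should come out equal or differ by one.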

Conclusions

Vagrant is a very powerful piece of software that is great for building infrastructure for development and testing purposes. It allows everything to be defined in a single directory with potentially a single configuration file. This file can be used to construct multiple guest machines on the Host with all relevant networking between them.

It has many time-saving features, such as automatic generation of ssh keys and easy connection to the created Virtual Machines. As well as shell scripts for setting up the Virtual Machines, as used in this lab, it supports other provisioners such as Ansible.

HAProxy can provide a simple Load Balancer with minimal configuration. Here it balances traffic across a pair of Virtual Machines, but the same approach can be used in front of a Kubernetes cluster to send traffic to the Nodes within the cluster.

The basic files for this lab can be downloaded onto any machine running VirtualBox and Vagrant to reconstruct the Lab. Once the Lab is finished, the Virtual Machines can be removed from the Host (for example with vagrant destroy), knowing that a single vagrant up will rebuild the Lab.