Best practice dictates that we should not store data within a container because containers are, by their nature, ephemeral. At any point the container may be destroyed and, hopefully, a replacement created by a suitable object such as a ReplicaSet. Even then, all data stored within the original container would be lost.

There are various ways of providing persistent storage within Kubernetes, but this post will look at the use of NFS. This is fairly simple to set up for a basic proof of concept and may be done within either a cloud-based or a self-hosted cluster.

We are going to set up an NFS server on the master node and mount its share as a volume within a POD running on one of the worker Nodes.

Setting up NFS on the Master and Worker Nodes

The NFS server will be installed on our Master Node, which is running Ubuntu 18.04 in this case.

```bash
sudo apt-get update
sudo apt-get install -y nfs-kernel-server
```

We'll also need to create a directory which will be mounted within the container.

```bash
sudo mkdir -p /home/salterje/My-NFS/my-website

# Change permissions on the created directory
sudo chmod 1777 /home/salterje/My-NFS/my-website/
```

Within the created directory we'll create a test index.html page that we'll use to provide content for an NGINX container running in our cluster.
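The post doesn't show how the test page was written. A minimal sketch is below: it wraps a heredoc in a small function (write_test_page is a hypothetical name, not part of the original setup) that writes the same content that cat displays later, defaulting to the directory created above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the test page that NGINX will later serve from the
# NFS share. Defaults to the directory created above; pass another path to override.
write_test_page() {
  local dir="${1:-/home/salterje/My-NFS/my-website}"
  # The quoted 'EOF' stops the shell expanding anything inside the heredoc
  cat > "$dir/index.html" <<'EOF'
<h1>This is a Test Page</h1>
<p>If everything works this will replace the nginx test page</p>
EOF
}
```

Running write_test_page with no argument writes /home/salterje/My-NFS/my-website/index.html. Because the directory was given 1777 permissions, it doesn't need sudo.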

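Once the page is created, the NFS server needs to be configured to share the directory. We'll use a quick and dirty configuration: the wildcard (*) exports the directory to any client, and no_root_squash lets a remote root user act as root on the share, so both are worth tightening for anything beyond a proof of concept.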

Add the following line to /etc/exports, for example with sudo vi /etc/exports:

```
/home/salterje/My-NFS/my-website *(rw,sync,no_root_squash,subtree_check)
```

Then tell the NFS server to re-read /etc/exports:

```bash
sudo exportfs -ra
```
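Editing /etc/exports with vi is fine for a one-off. If you want the step to be repeatable, a sketch like the following appends the export line only when it is missing and then re-reads the exports. add_nfs_export is a hypothetical helper name, and it assumes the user can run sudo.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add an NFS export line if it is not already present,
# then have the NFS server re-read its exports.
add_nfs_export() {
  local line="$1"
  local file="${2:-/etc/exports}"
  # -q quiet, -x whole-line match, -F fixed string (so '*' and '(' are literal)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    # tee runs under sudo because /etc/exports is owned by root
    printf '%s\n' "$line" | sudo tee -a "$file" > /dev/null
  fi
  sudo exportfs -ra
}
```

For this post's share: add_nfs_export '/home/salterje/My-NFS/my-website *(rw,sync,no_root_squash,subtree_check)'. Running it twice still leaves a single entry in /etc/exports.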

We'll test that things are working by mounting the newly created NFS share from one of the Nodes within the cluster.

```bash
# Install the NFS client on one of the nodes
sudo apt-get install -y nfs-common

# Check the export list (k8s-master must be resolvable from the node;
# the easiest way is to add it to /etc/hosts)
showmount -e k8s-master
```

```
Export list for k8s-master:
/home/salterje/My-NFS/my-website *
```

```bash
# Mount the NFS share
sudo mount k8s-master:/home/salterje/My-NFS/my-website /mnt
```

If the configuration has worked, the NFS directory on the master node should now be mounted on the /mnt directory of the first Node.

Running ls and cat against the mounted directory confirms this.

```bash
ls -lh /mnt
```

```
total 4.0K
-rw-rw-r-- 1 salterje salterje 94 Jul 23 17:04 index.html
```

```bash
cat /mnt/index.html
```

```
<h1>This is a Test Page</h1>
<p>If everything works this will replace the nginx test page</p>
```

That is great and gives a suitable platform for mounting the volume into a POD within our cluster. The NFS client must be installed on all of the worker Nodes, because the NFS mount for a POD is carried out by whichever Node the POD is scheduled on.
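With several workers, the client install can be looped over SSH. This is a sketch assuming SSH access from the master to each Node and sudo rights on them; install_nfs_client is a hypothetical helper, and k8s-node-1 in the usage line is an assumed hostname for the first Node (only k8s-node-2 appears in this post).

```bash
#!/usr/bin/env bash
# Hypothetical helper: install the NFS client (nfs-common) on each node given.
# Assumes SSH access to every node and sudo rights there.
install_nfs_client() {
  local node
  for node in "$@"; do
    echo "Installing nfs-common on $node"
    # -t allocates a terminal so sudo can prompt for a password if needed
    ssh -t "$node" 'sudo apt-get update && sudo apt-get install -y nfs-common' || {
      echo "Install failed on $node" >&2
      return 1
    }
  done
}
```

Usage: install_nfs_client k8s-node-1 k8s-node-2. It stops at the first Node that fails so the problem is easy to spot.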

Setting Up a Persistent Volume

A Persistent Volume (PV) is an area of storage that is independent of PODs and provides a layer of abstraction between Kubernetes and a wide range of storage types. In our case we will back it with NFS, but suitable plugins exist for a wide range of other solutions.

We'll write a manifest file to create a PV that links to the NFS server we have already set up on the master. It defines where the NFS server is and which directory is shared. The server name is resolved by each Node when it mounts the share, so k8s-master must be resolvable on every worker.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv-vol
spec:
  capacity:
    storage: 500Mi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /home/salterje/My-NFS/my-website
    server: k8s-master
    readOnly: false
```

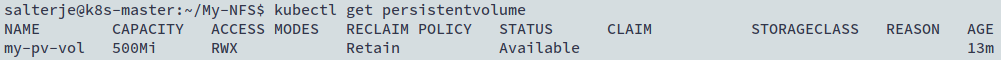

After applying the PV manifest with kubectl apply -f, we can verify that the PV has been created:

```bash
kubectl get persistentvolume
```
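A freshly created PV should report a STATUS of Available, changing to Bound once a claim takes it. If you are scripting these steps, a small polling loop avoids racing ahead of the controller. This is a sketch: wait_for_phase is a hypothetical helper, and the 2-second interval and 30 attempts are arbitrary choices.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a PV or PVC until .status.phase reaches the wanted value.
# Usage: wait_for_phase <type/name> <phase> [attempts]
wait_for_phase() {
  local resource="$1" wanted="$2" attempts="${3:-30}" phase="" i
  for ((i = 0; i < attempts; i++)); do
    phase=$(kubectl get "$resource" -o jsonpath='{.status.phase}' 2>/dev/null)
    if [ "$phase" = "$wanted" ]; then
      echo "$resource is $phase"
      return 0
    fi
    sleep 2   # give the controller time to act before asking again
  done
  echo "$resource did not reach $wanted (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For example, wait_for_phase pv/my-pv-vol Available after applying the PV, and wait_for_phase pvc/my-pvc-claim Bound once the claim has been applied.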

Creating a Persistent Volume Claim

Now that the Persistent Volume exists, we need a Persistent Volume Claim (PVC) to make use of it.

We'll create its YAML manifest, my-pvc-claim.yaml.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc-claim
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
```
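One caveat applies on clusters that have a default StorageClass, which is common on cloud-based clusters. A claim that omits storageClassName is typically assigned the default class and may be dynamically provisioned a new volume instead of binding to my-pv-vol, which has no class. A possible variant, sketched below under that assumption, sets storageClassName to an empty string and names the PV explicitly with volumeName. apply_pinned_pvc is a hypothetical helper; use it instead of my-pvc-claim.yaml rather than on top of an existing claim, since most PVC spec fields cannot be changed after creation.

```bash
#!/usr/bin/env bash
# Hypothetical variant of my-pvc-claim: pin the claim to my-pv-vol so that a
# default StorageClass (if the cluster has one) cannot provision a different volume.
apply_pinned_pvc() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc-claim
spec:
  storageClassName: ""      # empty string = no class, matching the class-less PV
  volumeName: my-pv-vol     # bind to this specific PV
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
EOF
}
```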

As usual, we create the PVC object by applying the YAML:

```bash
kubectl apply -f my-pvc-claim.yaml
```

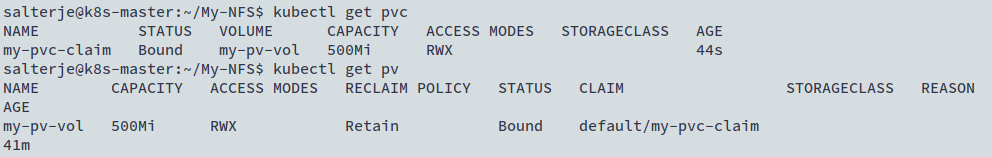

We can then check that the PVC has been created and that it has bound to the PV created earlier:

```bash
kubectl get pvc
kubectl get pv
```
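To see exactly which PV the claim is bound to, jsonpath output can pull out the relevant fields. A sketch, where show_claim_binding is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a claim's phase, the PV it is bound to,
# and the capacity it received.
show_claim_binding() {
  local claim="${1:-my-pvc-claim}"
  kubectl get pvc "$claim" \
    -o jsonpath='{.status.phase} {.spec.volumeName} {.status.capacity.storage}{"\n"}'
}
```

With the manifests in this post it should print something like Bound my-pv-vol 500Mi. The claim reports the PV's full 500Mi rather than the 200Mi it requested, because a claim binds to a whole PV; for NFS, Kubernetes does not enforce either figure on the share itself.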

Creating a Deployment to Use our Volumes

We've done the groundwork: setting up NFS on the master node, creating a PV and binding a PVC to it. We now need a suitable Deployment that creates an NGINX POD which uses the storage.

We'll create a suitable Deployment YAML file, my-nginx-nfs.yaml.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-nfs
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-nginx-nfs
  template:
    metadata:
      labels:
        app: my-nginx-nfs
    spec:
      containers:
        - image: nginx
          imagePullPolicy: Always
          name: nginx-nfs-container
          volumeMounts:
            - name: nfs-vol
              mountPath: /usr/share/nginx/html
          ports:
            - containerPort: 80
              protocol: TCP
      volumes:
        - name: nfs-vol
          persistentVolumeClaim:
            claimName: my-pvc-claim
```

The important parts of the YAML are the container's volumeMounts section, which defines where the volume is mounted within the container, and the POD's volumes section, which defines the volume itself. The name (nfs-vol here) must match in both places to make the link.

The claimName field within the volume links back to the PVC object that is already running within the cluster.

The manifest creates a Deployment that maintains a single self-healing POD running NGINX on port 80. The test index.html, which is actually stored on the Master Node, is mounted over /usr/share/nginx/html in place of the normal NGINX test page.

The YAML file just needs to be applied.

```bash
kubectl apply -f my-nginx-nfs.yaml
```

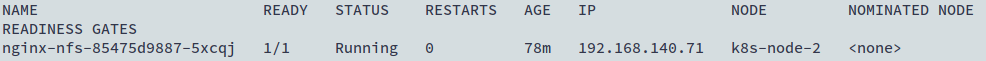

We can then find the IP address of the POD that has been created, and the Node it is running on, by adding the -o wide option to kubectl get pods:

```bash
kubectl get pods -o wide
```

This reveals that our POD has been deployed on Node 2 of the cluster and been allocated an IP address of 192.168.140.71.
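Because the IP changes whenever the POD is replaced, it can be handy to pull out just the Node and IP by label rather than reading the wide table. A sketch using the app=my-nginx-nfs label from the Deployment, where nginx_pod_location is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Node name and IP of the first POD
# carrying the Deployment's app=my-nginx-nfs label.
nginx_pod_location() {
  kubectl get pods -l app=my-nginx-nfs \
    -o jsonpath='{.items[0].spec.nodeName} {.items[0].status.podIP}{"\n"}'
}
```

In the run shown above this would print k8s-node-2 192.168.140.71.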

We'll now log onto that Node to check what the POD is serving.

```
salterje@k8s-node-2:~$ curl 192.168.140.71
<h1>This is a Test Page</h1>
<p>If everything works this will replace the nginx test page</p>
```

The NGINX container within our POD has taken its index.html page from the file served by the NFS server running on our master node.
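As an alternative to logging onto a Node and curling the POD's IP, kubectl exec can look inside the container directly. The sketch below (check_nfs_mount_in_pod is a hypothetical helper) prints the mount entry for the NGINX web root, which should show an nfs or nfs4 filesystem served from k8s-master, followed by the page file as the container sees it. It assumes the image provides cat, as the Debian-based nginx image does, and it reads the file rather than requesting it through NGINX, so it complements curl rather than replacing it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: inspect the NFS-backed web root from inside the NGINX container.
check_nfs_mount_in_pod() {
  local target=deploy/nginx-nfs   # kubectl picks one POD of the Deployment
  # Mount entry for the web root (expected to be an nfs/nfs4 filesystem)
  kubectl exec "$target" -- cat /proc/mounts | grep ' /usr/share/nginx/html '
  # The page file as the container sees it
  kubectl exec "$target" -- cat /usr/share/nginx/html/index.html
}
```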

If we delete the POD, the Deployment will re-create it and the data will still be present.

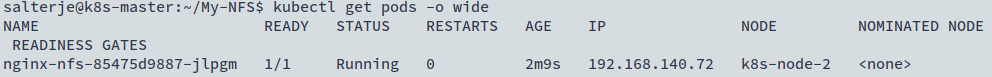

```
salterje@k8s-master:~/My-NFS$ kubectl delete pod nginx-nfs-85475d9887-5xcqj
pod "nginx-nfs-85475d9887-5xcqj" deleted
```
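The delete-and-check cycle can also be scripted. This sketch (replace_nginx_pod is a hypothetical helper) deletes the POD by its label, waits for the replacement to become Ready and prints the new IP; the 120-second timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete the NGINX POD, wait for its replacement, print the new IP.
replace_nginx_pod() {
  local label=app=my-nginx-nfs
  # kubectl delete waits for the POD to be gone by default (--wait=true)
  kubectl delete pod -l "$label" || return 1
  # The ReplicaSet has already created a replacement; wait until it is Ready
  kubectl wait --for=condition=Ready pod -l "$label" --timeout=120s || return 1
  kubectl get pods -l "$label" -o jsonpath='{.items[0].status.podIP}{"\n"}'
}
```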

We will see that the new POD has a different name and a new IP address.

We can log onto Node 2 and confirm that the new POD is still serving the content.

```
salterje@k8s-node-2:~$ curl 192.168.140.72
<h1>This is a Test Page</h1>
<p>If everything works this will replace the nginx test page</p>
```

The final test is to modify the file being served on the Master.

```
salterje@k8s-master:~/My-NFS$ vi /home/salterje/My-NFS/my-website/index.html
```
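If you'd rather not open an editor, appending the extra line non-interactively has the same effect. A sketch (append_master_change is a hypothetical helper) using the line that appears in the output below:

```bash
#!/usr/bin/env bash
# Hypothetical helper: non-interactive equivalent of the vi edit on the Master.
append_master_change() {
  local page="${1:-/home/salterje/My-NFS/my-website/index.html}"
  # >> appends, leaving the existing test page content in place
  echo '<p>This is a change that has been made on the Master Node</p>' >> "$page"
}
```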

Another quick check on the POD shows the change:

```
salterje@k8s-node-2:~$ curl 192.168.140.72
<h1>This is a Test Page</h1>
<p>If everything works this will replace the nginx test page</p>
<p>This is a change that has been made on the Master Node</p>
```

Note that the new POD has a different IP address from the original. This is an issue that we will look at in a different post; for now we have proven the functionality by finding the new IP address and checking its contents from within the cluster.

Conclusions

This post is an introduction to giving containers running in a cluster access to persistent storage. This is necessary because containers are ephemeral by nature, so any data stored within them is lost when they are shut down.

We have used NFS in this example, but the same principles apply to a range of other storage solutions, and the way the Deployment is written remains the same.

The volumeMounts and volumes sections are built in a similar way to those used for mounting ConfigMaps within a POD. For more details see here

We have confirmed the POD's content from within the cluster by connecting directly to the POD's IP address. This is of limited value if access is required from outside the cluster; we'll look at that in another post.