By default, Kubernetes allows all PODs within a cluster to communicate with one another: all ingress and egress traffic is allowed. Network Policies let you write rules that define how groups of PODs may communicate with each other and with other network endpoints.

Network Policies are not enforced by Kubernetes itself but by the network plugin running in the cluster. The choice of network plugin therefore matters: it must be one that supports Network Policies.

Basic Setup

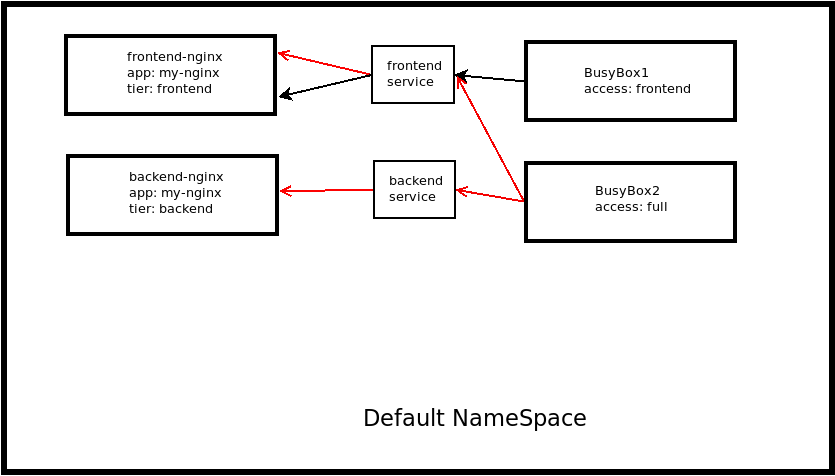

To show the impact of Network Policies we are going to set up two NGINX Deployments: one labelled tier: frontend and the other labelled tier: backend. Each Deployment runs a single Replica in the default namespace.

We'll also add two BusyBox PODs. Ultimately, one will be able to connect only to the frontend, while the other will be able to connect to both NGINX Deployments. The two BusyBox PODs will also be isolated from each other so that they cannot connect to one another.

We'll also set up Services in front of the NGINX PODs so that they remain reachable under a stable name even if a POD is deleted and re-created.

The network plugin in use on the cluster is Calico, which supports Network Policies. We'll first test connectivity without any Network Policy, to prove that all PODs can reach one another, and then implement the policies.

Setting Up PODs

The PODs are quite simple and are created by the following Deployment YAML manifests. Each of the two NGINX Deployments runs a single Replica, carries suitable labels, and mounts a ConfigMap over the NGINX document root so that index.html shows which POD is serving the page.

    vagrant@k8s-master:~$ cat my-nginx-backend.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: my-nginx
        tier: backend
      name: backend-nginx
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: my-nginx
          tier: backend
      template:
        metadata:
          labels:
            app: my-nginx
            tier: backend
        spec:
          containers:
          - image: nginx
            imagePullPolicy: Never
            name: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: index-configmap
              mountPath: /usr/share/nginx/html
          volumes:
          - name: index-configmap
            configMap:
              name: backend

    vagrant@k8s-master:~$ cat my-nginx-frontend.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: my-nginx
        tier: frontend
      name: frontend-nginx
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: my-nginx
          tier: frontend
      template:
        metadata:
          labels:
            app: my-nginx
            tier: frontend
        spec:
          containers:
          - image: nginx
            imagePullPolicy: Always
            name: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: index-configmap
              mountPath: /usr/share/nginx/html
          volumes:
          - name: index-configmap
            configMap:
              name: frontend

There will also be two BusyBox PODs, used to check connectivity to the NGINX PODs. Again we use Deployments, each with a single Replica, so that the PODs are self-healing. (The containers actually run the alpine image, whose shell and utilities such as ping and wget are provided by BusyBox.)

    vagrant@k8s-master:~$ cat Busybox1.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: busybox1
      labels:
        app: my-busybox
        access: frontend
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: my-busybox
          access: frontend
      template:
        metadata:
          labels:
            app: my-busybox
            access: frontend
        spec:
          containers:
          - name: mytest-alpine
            image: alpine
            command:
            - sleep
            - "3600"

    vagrant@k8s-master:~$ cat Busybox2.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: busybox2
      labels:
        app: my-nginx
        access: full
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: my-nginx
          access: full
      template:
        metadata:
          labels:
            app: my-nginx
            access: full
        spec:
          containers:
          - name: mytest-alpine
            image: alpine
            command:
            - sleep
            - "3600"

Two Services have been set up with suitable selectors to give easy access to the NGINX PODs. Each one selects the PODs created by the corresponding NGINX Deployment.

    vagrant@k8s-master:~$ cat service-frontend.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: my-nginx
      name: nginx-frontend-service
      namespace: default
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: my-nginx
        tier: frontend
      sessionAffinity: None
      type: ClusterIP

    vagrant@k8s-master:~$ cat service-backend.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: my-nginx
      name: nginx-backend-service
      namespace: default
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: my-nginx
        tier: backend
      sessionAffinity: None
      type: ClusterIP

There are also two ConfigMaps in the setup, used purely to mount a meaningful test page into each of the NGINX PODs.

vagrant@k8s-master:~$ kubectl describe configmaps frontend

Name: frontend

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

index.html:

----

<h1>This is the FrontEnd</h1>

Events: <none>

vagrant@k8s-master:~$ kubectl describe configmaps backend

Name: backend

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

index.html:

----

<h1>This is the backend</h1>

Events: <none>
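The manifests for these ConfigMaps are not shown in this article. A minimal sketch of what the frontend one could look like, reconstructed from the describe output above (the exact file used in this setup is an assumption), is:

```yaml
# Hypothetical manifest reconstructed from the `kubectl describe` output;
# the original file used in this setup is not shown.
apiVersion: v1
kind: ConfigMap
metadata:
  name: frontend
  namespace: default
data:
  # Each key becomes a file name under the mountPath of the volume,
  # so this replaces /usr/share/nginx/html/index.html in the container.
  index.html: |
    <h1>This is the FrontEnd</h1>
```

The backend ConfigMap would presumably follow the same pattern, with name: backend and its own heading text.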

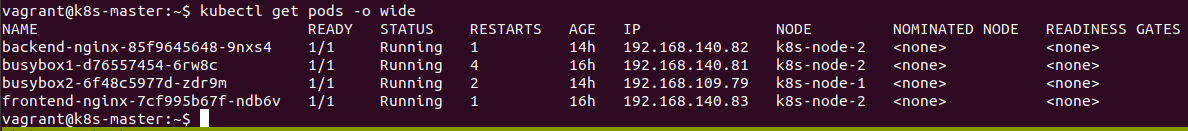

Testing Connectivity

With this setup in place we can prove connectivity between the PODs running within the cluster. To check this we'll find the IP addresses of all PODs within the cluster (for example with kubectl get pods -o wide) and start an interactive terminal on each of the BusyBox PODs.

vagrant@k8s-master:~$ kubectl exec -it busybox1-d76557454-6rw8c -- /bin/sh

/ # hostname

busybox1-d76557454-6rw8c

/ # ping 192.168.140.82

PING 192.168.140.82 (192.168.140.82): 56 data bytes

64 bytes from 192.168.140.82: seq=0 ttl=63 time=0.138 ms

64 bytes from 192.168.140.82: seq=1 ttl=63 time=0.174 ms

^C

--- 192.168.140.82 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.138/0.156/0.174 ms

/ # ping 192.168.140.81

PING 192.168.140.81 (192.168.140.81): 56 data bytes

64 bytes from 192.168.140.81: seq=0 ttl=64 time=0.067 ms

64 bytes from 192.168.140.81: seq=1 ttl=64 time=0.062 ms

^C

--- 192.168.140.81 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.062/0.064/0.067 ms

/ # ping 192.168.109.79

PING 192.168.109.79 (192.168.109.79): 56 data bytes

64 bytes from 192.168.109.79: seq=0 ttl=62 time=1.299 ms

64 bytes from 192.168.109.79: seq=1 ttl=62 time=1.110 ms

^C

--- 192.168.109.79 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.110/1.204/1.299 ms

/ # ping 192.168.109.83

PING 192.168.109.83 (192.168.109.83): 56 data bytes

^C

--- 192.168.109.83 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

/ #

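The replies show that the BusyBox POD can reach the other PODs it pinged. The final ping, to 192.168.109.83, gets no reply; that address does not match the address used for busybox2 later in this article (192.168.109.80), so it does not appear to belong to one of the test PODs.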

We can also prove that it can reach the NGINX PODs through the two Services. This uses the DNS name of each Service, which routes the request to the Endpoints of the selected PODs.

/ # wget -qO- nginx-frontend-service

<h1>This is the FrontEnd</h1>

/ # wget -qO- nginx-backend-service

<h1>This is the backend</h1>

The same tests can be tried on the other BusyBox POD with the same results.

This shows that, by default, the PODs can all reach one another. What we want is to restrict this to just the following connectivity:

- BusyBox 1 will only have connectivity to the frontend-nginx POD

- BusyBox 2 will have connectivity to both NGINX Deployments

- The BusyBox PODs will not have connectivity with one another

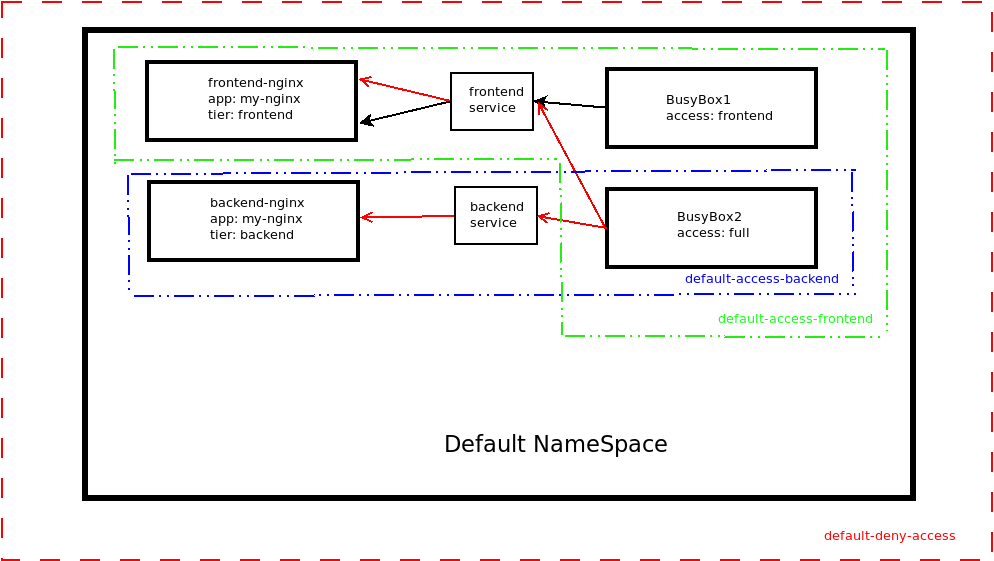

Setting up Network Policies within the Default Namespace

We will implement some Network Policies to restrict inter-POD connectivity within the Default Namespace while still allowing the access that we would like.

Removing all Access

The first policy that will be set is to remove all access between PODs in the Default Namespace.

    vagrant@k8s-master:~$ cat network-policy-implicit-deny.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default-deny-ingress
      namespace: default
    spec:
      podSelector: {}
      policyTypes:
      - Ingress

The empty {} in the podSelector section means that the policy applies to every POD in the Namespace. Listing Ingress under policyTypes without defining any ingress rules means that no inbound traffic to those PODs is allowed.

As soon as this is applied, we lose all connections between the PODs, including those made via the Services that have been created.

This is shown by a repeat of the tests carried out from the BusyBox POD.

/ # wget -qO- nginx-backend-service

^C

/ # wget -qO- -t=1 nginx-backend-service

^C

/ # wget -qO- --timeout=5 nginx-backend-service

wget: download timed out

/ # wget -qO- --timeout=5 nginx-frontend-service

wget: download timed out

/ # hostname

busybox1-d76557454-6rw8c

/ # ping -c 2 192.168.140.82

PING 192.168.140.82 (192.168.140.82): 56 data bytes

^C

--- 192.168.140.82 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

/ # ping -c 2 192.168.140.83

PING 192.168.140.83 (192.168.140.83): 56 data bytes

^C

--- 192.168.140.83 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

/ # ping -c 2 192.168.109.79

PING 192.168.109.79 (192.168.109.79): 56 data bytes

^C

--- 192.168.109.79 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

The same tests can also be run from the other PODs with the same results.
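The policy above only isolates ingress; outbound traffic from the PODs is untouched. As a sketch of how the same pattern extends to both directions (this is not applied in this walkthrough, and the policy name is hypothetical), Egress can be added to policyTypes:

```yaml
# Sketch only: NOT applied in this walkthrough.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all   # hypothetical name
  namespace: default
spec:
  podSelector: {}          # every POD in the namespace
  policyTypes:
  - Ingress                # no ingress rules listed: all inbound traffic denied
  - Egress                 # no egress rules listed: all outbound traffic denied
```

Note that denying all egress would also stop the PODs from sending DNS queries, so lookups of names such as nginx-frontend-service would fail unless a further policy allowed DNS traffic.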

We must now apply some policies to allow the connections that we want.

New Policy

We have applied a blanket policy to stop all inter-POD communication within the default Namespace and proven it works. The next stage is to write a policy that will allow:

- Connection to the frontend-nginx Deployment from all PODs that have a label of access: frontend or access: full

- Connection to the backend-nginx Deployment only from PODs that have a label of access: full

- No communication between the two BusyBox PODs

The following Network Policies will enable this:

    vagrant@k8s-master:~$ cat network-policy-access-frontend.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default-access-frontend
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: my-nginx
          tier: frontend
      ingress:
      - from:
        - podSelector:
            matchLabels:
              access: "frontend"
      - from:
        - podSelector:
            matchLabels:
              access: "full"

    vagrant@k8s-master:~$ cat network-policy-access-backend.yaml
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default-access-backend
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: my-nginx
          tier: backend
      ingress:
      - from:
        - podSelector:
            matchLabels:
              access: "full"
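The frontend policy lists two separate ingress rules, one per label value. As an alternative sketch (not the manifest used in this article), the same intent can be expressed as a single rule whose podSelector uses matchExpressions with the In operator:

```yaml
# Alternative sketch of default-access-frontend using a single ingress rule.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-access-frontend
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: my-nginx
      tier: frontend
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchExpressions:
        # Matches PODs whose access label is either frontend or full.
        - key: access
          operator: In
          values:
          - frontend
          - full
```

Both forms should admit the same PODs; the two-rule version is arguably easier to read, while the single-rule version keeps the list of permitted label values in one place.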

We can check the policies that are running:

vagrant@k8s-master:~$ kubectl get networkpolicies.networking.k8s.io

NAME POD-SELECTOR AGE

default-access-backend app=my-nginx,tier=backend 3m24s

default-access-frontend app=my-nginx,tier=frontend 21m

default-deny-ingress <none> 3h4m

We can also get more detail of the policies.

vagrant@k8s-master:~$ kubectl describe networkpolicies.networking.k8s.io default-access-backend

Name: default-access-backend

Namespace: default

Created on: 2020-08-14 16:34:35 +0000 UTC

Labels: <none>

Annotations: Spec:

PodSelector: app=my-nginx,tier=backend

Allowing ingress traffic:

To Port: <any> (traffic allowed to all ports)

From:

PodSelector: access=full

Not affecting egress traffic

Policy Types: Ingress

vagrant@k8s-master:~$ kubectl describe networkpolicies.networking.k8s.io default-access-frontend

Name: default-access-frontend

Namespace: default

Created on: 2020-08-14 16:16:32 +0000 UTC

Labels: <none>

Annotations: Spec:

PodSelector: app=my-nginx,tier=frontend

Allowing ingress traffic:

To Port: <any> (traffic allowed to all ports)

From:

PodSelector: access=frontend

----------

To Port: <any> (traffic allowed to all ports)

From:

PodSelector: access=full

Not affecting egress traffic

Policy Types: Ingress

vagrant@k8s-master:~$ kubectl describe networkpolicies.networking.k8s.io default-deny-ingress

Name: default-deny-ingress

Namespace: default

Created on: 2020-08-14 13:33:28 +0000 UTC

Labels: <none>

Annotations: Spec:

PodSelector: <none> (Allowing the specific traffic to all pods in this namespace)

Allowing ingress traffic:

<none> (Selected pods are isolated for ingress connectivity)

Not affecting egress traffic

Policy Types: Ingress

The final stage is to check the connections from both BusyBox PODs to ensure the connectivity is what we expect.

From Busybox1 we should only be able to get to the frontend NGINX and not be able to ping the other BusyBox POD:

/ # hostname

busybox1-d76557454-6rw8c

/ # ping 192.168.109.80 # busybox2

PING 192.168.109.80 (192.168.109.80): 56 data bytes

^C

--- 192.168.109.80 ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss

/ # wget -qO- --timeout=2 nginx-frontend-service

<h1>This is the FrontEnd</h1>

/ # wget -qO- --timeout=2 nginx-backend-service

wget: download timed out

From Busybox2 we should be able to get to both NGINX Deployments and not be able to ping the other BusyBox POD:

/ # hostname

busybox2-68b44589bf-hx7hm

/ # ping 192.168.140.81 # busybox1

PING 192.168.140.81 (192.168.140.81): 56 data bytes

^C

--- 192.168.140.81 ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss

/ # wget -qO- --timeout=2 nginx-frontend-service

<h1>This is the FrontEnd</h1>

/ # wget -qO- --timeout=2 nginx-backend-service

<h1>This is the backend</h1>

/ #

/ #

We have now restricted the traffic flow within the NameSpace so that PODs cannot communicate unless they are explicitly allowed to. The connectivity has been proven from within the Test BusyBox PODs themselves.

This has been a relatively simple policy that has only been implemented on ingress into the PODs and we have not filtered further on ports. We have also only implemented it with the POD selector option and only implemented it within a single NameSpace.
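To give an idea of the options not used here, the following is a sketch of a stricter variant of the backend policy that filters on port and on the source Namespace as well as on POD labels. The policy name and the team: testing Namespace label are hypothetical and are not part of this setup.

```yaml
# Sketch only: a stricter variant of default-access-backend.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-http-only        # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: my-nginx
      tier: backend
  policyTypes:
  - Ingress
  ingress:
  - from:
    # namespaceSelector and podSelector in the SAME list item are ANDed:
    # the source POD must carry access=full AND live in a Namespace
    # labelled team=testing (a hypothetical label).
    - namespaceSelector:
        matchLabels:
          team: testing
      podSelector:
        matchLabels:
          access: full
    # Only TCP port 80 is allowed; other ports and protocols are not matched.
    ports:
    - protocol: TCP
      port: 80
```

If this were used in place of default-access-backend, the default Namespace itself would need the team: testing label for busybox2 to keep its access to the backend.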

Conclusions

Network Policies are another tool within Kubernetes for adding a layer of security. Without them, all PODs within the cluster are able to communicate with each other by default.

One interesting point about how this functionality is implemented is that there is no way to write an explicit deny statement. Policies contain only allow statements, and traffic is allowed if any statement matches it.

A connection is allowed if any Network Policy applied within the NameSpace permits it. This means that traffic not allowed by one policy will still pass if another policy allows it. This needs to be kept in mind, because many other security mechanisms behave the opposite way: a deny rule in any one place blocks the traffic regardless of what is allowed elsewhere.
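As an illustration of this additive behaviour, consider the hypothetical policy below (it is not part of the setup in this article). If it were applied alongside default-access-backend, PODs labelled access: frontend, such as busybox1, would also be able to reach the backend, and nothing written in default-access-backend could prevent that.

```yaml
# Hypothetical extra policy, shown only to illustrate additive behaviour.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: extra-access-backend     # hypothetical name
  namespace: default
spec:
  # Selects the same PODs as default-access-backend.
  podSelector:
    matchLabels:
      app: my-nginx
      tier: backend
  ingress:
  - from:
    - podSelector:
        matchLabels:
          access: frontend       # the union of both policies is allowed
```

The only way to withdraw the access again would be to delete or edit this policy, since there is no deny rule that could override it.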

The examples shown are fairly simple but policies can become very complex and like all systems the behaviour should be checked to ensure that it performs as expected.

It should also be noted that the enforcement is done by the CNI plugin used within the cluster, so the choice of cluster networking solution is important.