By default the Kubernetes scheduler selects a suitable Node for each POD, but it is possible to manually place PODs on particular Nodes. This is useful when an application needs specific hardware or there are other reasons it should be deployed on particular servers.

In this post we have a simple cluster with two worker Nodes, k8s-node-1 and k8s-node-2, and we'll look at three ways of manually ensuring that our PODs are scheduled on a particular Node.

NodeName

The simplest way of manually scheduling a POD is to set nodeName. This field sits in the POD template spec within a Deployment and names the Node directly; a POD with nodeName set bypasses the scheduler altogether and is run by the kubelet on that Node.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: nodeName-nginx
      name: nodename-nginx
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nodeName-nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nodeName-nginx
        spec:
          nodeName: k8s-node-2 # This Line will select node
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: indexpage
              mountPath: /usr/share/nginx/html
          volumes:
          - name: indexpage
            configMap:
              name: nodename

This is another simple NGINX deployment which has a configMap mounted to provide a suitable index page.

    vagrant@k8s-master:~/MyProject$ kubectl describe configmaps nodename --namespace=test
    Name:         nodename
    Namespace:    test
    Labels:       <none>
    Annotations:

    Data
    ====
    index.html:
    ----
    <h1>This is nginx-1</h1>
    <p>This has been put on a node selected by the Nodename</p>

    Events:  <none>
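
For reference, a ConfigMap producing the output above could be written roughly as follows. This is a sketch reconstructed from the describe output, not necessarily the manifest that was actually applied to the cluster.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nodename
  namespace: test
data:
  # Each key becomes a file name under the volume's mountPath,
  # so this ends up as /usr/share/nginx/html/index.html
  index.html: |
    <h1>This is nginx-1</h1>
    <p>This has been put on a node selected by the Nodename</p>
```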

We'll expose the Deployment with a NodePort.

    apiVersion: v1
    kind: Service
    metadata:
      creationTimestamp: null
      labels:
        app: nodeName-nginx
      name: nodename-nginx
      namespace: test
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: nodeName-nginx
      type: NodePort

In a normal Deployment the scheduler spreads the PODs across the available Nodes, although an exactly even split is not guaranteed (in our cluster's case all Nodes have been built in the same way, with the same CPU and memory capacity).

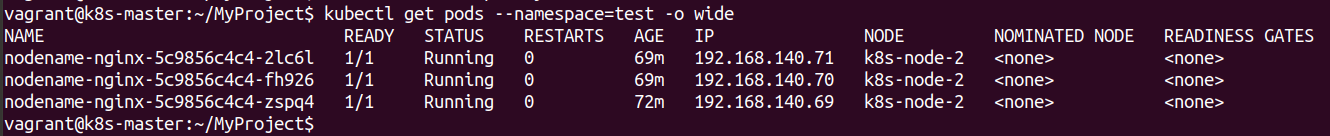

As we have used nodeName in the template, all the PODs are deployed on the same Node.

    kubectl get pods --namespace=test -o wide

NodeSelector

With a NodeSelector we can assign our own labels to the Nodes within a cluster and use these to select where to run a POD.

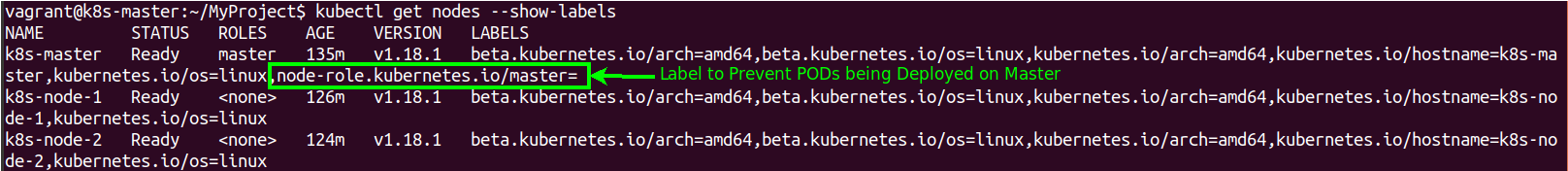

To begin with we'll look at how our cluster Nodes are labelled by default.

    kubectl get nodes --show-labels

The most significant label is the one assigned automatically to the master Node, node-role.kubernetes.io/master=. In normal operation working PODs are not run on the master Nodes; strictly speaking this is enforced by a NoSchedule taint with the same key rather than by the label itself.

For our purpose we will not deploy our working PODs on the master but will add a label onto each of the worker Nodes.

    kubectl label nodes k8s-node-1 colour=red
    kubectl label nodes k8s-node-2 colour=blue

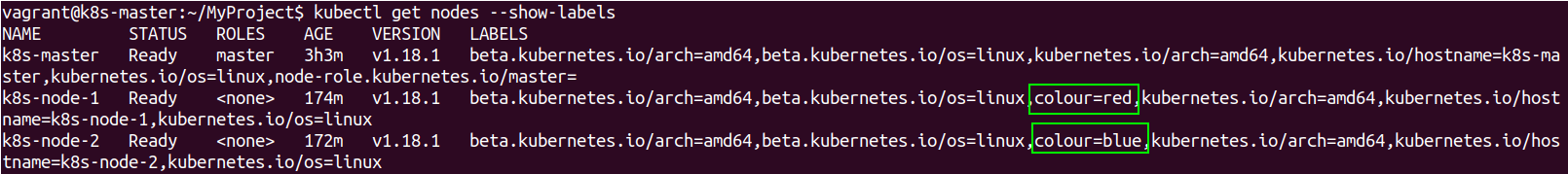

    kubectl get nodes --show-labels
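
Labels are stored in the Node object's metadata. As a rough sketch, trimmed to the relevant fields and assuming the built-in kubernetes.io/hostname label carries the same value as the Node name (the usual default), k8s-node-1 would now look something like this:

```yaml
apiVersion: v1
kind: Node
metadata:
  name: k8s-node-1
  labels:
    colour: red                          # added by the kubectl label command
    kubernetes.io/hostname: k8s-node-1   # built-in label, assumed default value
```

The other built-in labels are omitted here for brevity.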

We now have our labels on the Nodes and will deploy some more PODs, but have them run only on the Node with the colour=red label. In this case this is a single Node, but in a larger cluster it could be any number of Nodes we would be happy for the PODs to run on.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: nodeselector-nginx
      name: nodeselector-nginx
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nodeselector-nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nodeselector-nginx
        spec:
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: indexpage
              mountPath: /usr/share/nginx/html
          nodeSelector: ## This is the nodeSelector
            colour: red ## This is the label for the Node
          volumes:
          - name: indexpage
            configMap:
              name: nodeselector

The Deployment manifest is slightly different: we no longer rely on the Node name but on a nodeSelector set to colour: red. Only Nodes carrying every label listed in the nodeSelector are eligible to run the PODs.

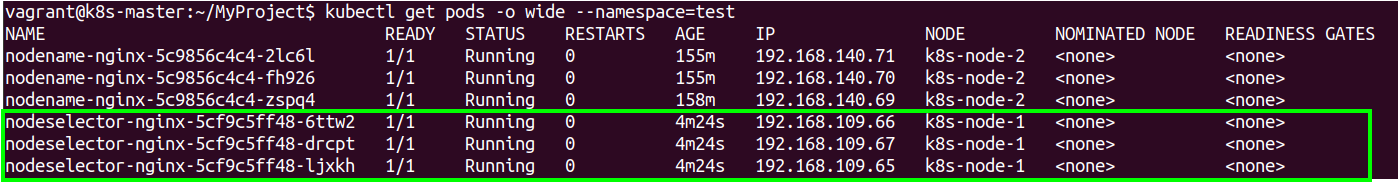

We can then confirm our PODs are running on the desired Node in the normal way.

    kubectl get pods -o wide --namespace=test

We have confirmed that our second deployment has all 3 replicas running on the correct Node (k8s-node-1, which we had previously labelled colour=red). Of course we could now spray paint a physical server red but this would probably be taking things a little far.

This ability to link Deployments to labels that can be easily changed on Nodes allows a lot of flexibility over where applications run within a cluster. In a physical on-premises cluster this may make maintenance easier, as applications can be moved off hardware that then needs to be modified or worked on. Note that labels are only checked when a POD is scheduled, so PODs already running stay where they are until they are recreated.

NodeAffinity

Our last method again makes use of the labels that we have put on the Nodes. NodeAffinity is similar to the nodeSelector method but allows slightly more nuance and the ability to have a soft/preference option rather than a hard selector.

The options that can be used are also more expressive than a simple yes/no operator.
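
To illustrate the soft option, a rule of roughly the following shape asks the scheduler to prefer Nodes labelled colour=blue while still allowing other Nodes if none are suitable. This is only a sketch of the affinity section of a POD template spec and is not used in this post; the weight of 50 is an arbitrary illustrative value (the valid range is 1 to 100). Besides In, the operators available in matchExpressions are NotIn, Exists, DoesNotExist, Gt and Lt.

```yaml
affinity:
  nodeAffinity:
    # Soft rule: used to score candidate Nodes, not enforced
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 50            # illustrative value, 1-100
      preference:
        matchExpressions:
        - key: colour
          operator: In
          values:
          - blue
```

The Deployment that follows uses the hard (required) form instead.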

We'll set up another Deployment of 3 NGINX replicas which will run on our Node with the colour=blue label (k8s-node-2).

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: nodeaffinity-nginx
      name: nodeaffinity-nginx
      namespace: test
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nodeaffinity-nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nodeaffinity-nginx
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: colour
                    operator: In
                    values:
                    - blue
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: indexpage
              mountPath: /usr/share/nginx/html
          volumes:
          - name: indexpage
            configMap:
              name: nodeaffinity

It can be seen that there is quite a large affinity section within the spec of the template. All affinity rules go in this section, including rules based on the placement of other PODs within the cluster as well as on Nodes. It can also hold anti-affinity rules, which keep PODs away from other PODs; for Nodes the same effect is achieved with operators such as NotIn and DoesNotExist.

In this example we are keeping things simple by matching the value blue for the Node label colour.
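
For comparison, an anti-affinity rule between PODs might look roughly like the following. This is a sketch only and is not applied in this post: it would stop two PODs carrying the app: nodeaffinity-nginx label from sharing a Node, using the built-in kubernetes.io/hostname label as the topology key.

```yaml
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        matchLabels:
          app: nodeaffinity-nginx
      # Treat each Node (identified by its hostname label) as a separate domain
      topologyKey: kubernetes.io/hostname
```

With only two worker Nodes, a hard rule like this would be expected to leave the third of 3 replicas Pending, assuming the master stays unschedulable.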

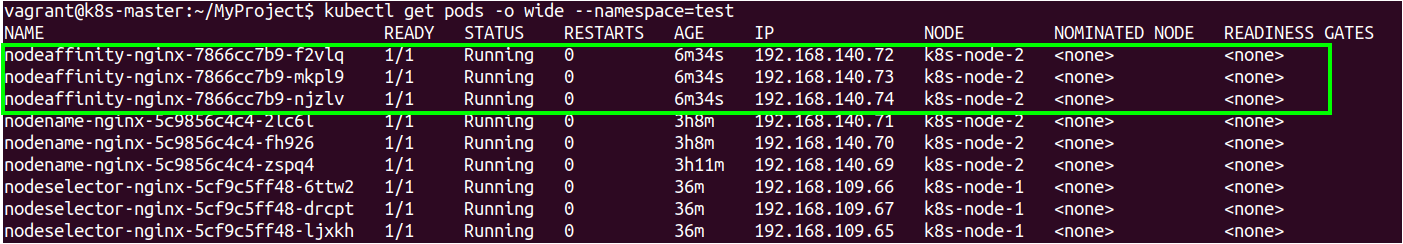

Once we run the Deployment we can confirm that the new PODs have been put onto the correct Node.

    kubectl get pods -o wide --namespace=test

We can confirm that the nodeaffinity PODs have all ended up on the same Node which has the colour:blue label.

Conclusions

This post has run through the basic ways that the Kubernetes scheduler can be overridden to allow applications to be deployed on particular Nodes within the cluster.

The simplest but least flexible way is to make use of nodeName within the POD spec. This is simple to do, but it ties the POD directly to the Node's name, which is not easily changed once the Node has been deployed.

The second method makes use of the user-defined labels that can be easily added to and removed from Nodes and uses the nodeSelector option. This is another easy option and is probably a better method than the cruder nodeName method.

The final method makes use of affinities, of which nodeAffinity is just one option. This gives the most flexibility and can be used to construct quite complex rules on where to run PODs (something we didn't make use of in this post).

Manually overriding the scheduler should be carefully thought about, as it is very possible to get into trouble with unexpected results. It is, however, a powerful tool that can be used to make the most of resources and performance.

Using labels to influence behaviour is generally the preferred approach should there be a requirement to manipulate the placement of PODs within the cluster.