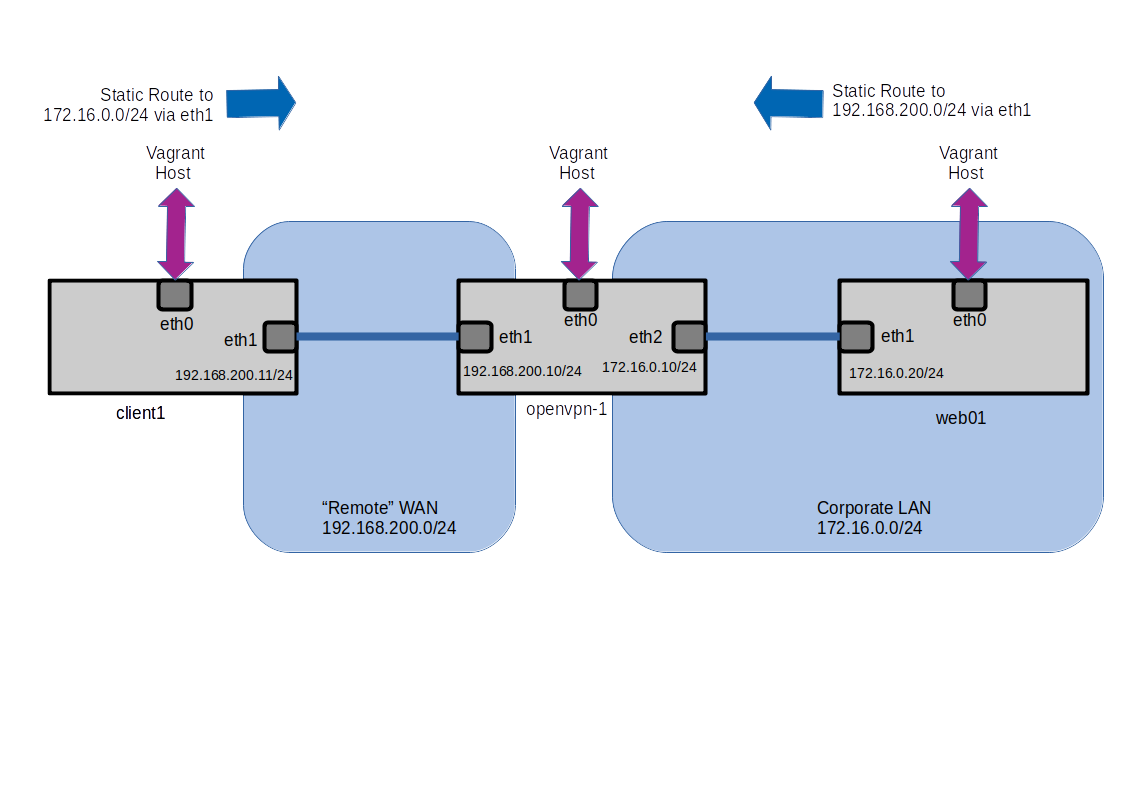

OpenVPN is a popular open-source VPN solution. In this post we'll set up a Lab in which a Virtual Machine acts as a gateway, giving a client secure access to a web server on the 172.16.0.0/24 network. The lab shows how to create a VPN tunnel over the 192.168.200.0/24 link and route traffic through it to the web server.

The key is creating suitable routes so that traffic goes via the tunnel rather than over the underlay network. We will also see the source address of the client's requests change as they pass through the OpenVPN setup.

Setting up the Basic Lab

The Lab consists of three Virtual Machines running under VirtualBox, created from a Vagrantfile. The Vagrantfile includes simple shell provisioning scripts that create static routes and, on the OpenVPN server, download the installation script used to set up the server.

The provisioning also sets up an Nginx instance as a test target: the client will connect to it and pull back HTTP traffic, first directly and then over the VPN tunnel.

The scripts can be found at https://github.com/salterje/openvpn-vagrant

Checking Things Before OpenVPN

Because Vagrant always creates a default gateway on eth0, the provisioning scripts add static routes to ensure that all communication between the web server and the client takes place through the OpenVPN machine rather than through the Host.

In addition, the OpenVPN machine has been provisioned to allow IP forwarding, so that traffic between the web server and the client can be forwarded between its eth1 and eth2 interfaces.
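The routes and forwarding described above come down to a handful of commands. The sketch below is illustrative only and is not taken from the salterje/openvpn-vagrant provisioning scripts: the helper name `static_route_cmds` and its role names are hypothetical, and the addresses are the ones that appear in the routing tables in this section. It prints the commands rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: print the commands each VM's provisioning would need.
# Addresses match this post's routing tables; the role names are assumptions.
static_route_cmds() {
  case "$1" in
    client)
      # Reach the web server's network through the OpenVPN VM's eth1 address
      echo "ip route add 172.16.0.0/24 via 192.168.200.10 dev eth1 proto static"
      ;;
    web)
      # Send traffic for the client network back through the OpenVPN VM's eth2 address
      echo "ip route add 192.168.200.0/24 via 172.16.0.10 dev eth1 proto static"
      ;;
    openvpn)
      # The gateway VM only needs to forward packets between eth1 and eth2
      echo "sysctl -w net.ipv4.ip_forward=1"
      ;;
    *)
      echo "unknown role: $1" >&2
      return 1
      ;;
  esac
}
```

For example, `static_route_cmds client | sudo sh` would apply the client's route. Note that `sysctl -w` only lasts until the next reboot; a file under /etc/sysctl.d/ makes the setting persistent.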

The forwarding setting can be confirmed by checking that /proc/sys/net/ipv4/ip_forward contains 1, and the routes by inspecting the routing table.

vagrant@openvpn-1:~$ cat /proc/sys/net/ipv4/ip_forward

1

vagrant@openvpn-1:~$ ip route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

172.16.0.0/24 dev eth2 proto kernel scope link src 172.16.0.10

192.168.200.0/24 dev eth1 proto kernel scope link src 192.168.200.10

The routing tables can also be confirmed on the two other machines.

vagrant@client-1:~$ ip route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

172.16.0.0/24 via 192.168.200.10 dev eth1 proto static

192.168.200.0/24 dev eth1 proto kernel scope link src 192.168.200.11

vagrant@web-1:~$ ip route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

172.16.0.0/24 dev eth1 proto kernel scope link src 172.16.0.20

192.168.200.0/24 via 172.16.0.10 dev eth1 proto static
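To check a single route without reading the whole table, the `ip route` output can be filtered. A minimal sketch; the helper name `route_for_prefix` is hypothetical. It prints the rest of the entry for an exact destination prefix and fails if there is none.

```shell
#!/bin/sh
# Read `ip route` output on stdin and print the remainder of the entry whose
# destination is exactly the given prefix (blank lines never match).
route_for_prefix() {
  awk -v p="$1" '
    $1 == p { $1 = ""; sub(/^ /, ""); print; found = 1 }
    END { exit !found }   # non-zero exit status when no entry matched
  '
}
```

On client-1, `ip route | route_for_prefix 172.16.0.0/24` should print `via 192.168.200.10 dev eth1 proto static`.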

If everything is working, the client should be able to connect to the web server through the OpenVPN machine and pull back the test page served by Nginx.

vagrant@client-1:~$ tracepath -n 172.16.0.20

1?: [LOCALHOST] pmtu 1500

1: 192.168.200.10 0.393ms

1: 192.168.200.10 0.240ms

2: 172.16.0.20 0.454ms reached

Resume: pmtu 1500 hops 2 back 2

vagrant@client-1:~$ curl 172.16.0.20

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

This confirms that the client can get the content from the web server and that all traffic is routed through the server in the middle rather than via the underlying Host.

As a final test, we ping the web server from the client and use tcpdump to watch the traffic arriving on the eth1 and eth2 interfaces of the server in the middle.

vagrant@client-1:~$ ping -s 200 172.16.0.20

PING 172.16.0.20 (172.16.0.20) 200(228) bytes of data.

208 bytes from 172.16.0.20: icmp_seq=1 ttl=63 time=1.43 ms

208 bytes from 172.16.0.20: icmp_seq=2 ttl=63 time=1.46 ms

208 bytes from 172.16.0.20: icmp_seq=3 ttl=63 time=0.573 ms

208 bytes from 172.16.0.20: icmp_seq=4 ttl=63 time=1.56 ms

vagrant@openvpn-1:~$ sudo tcpdump -i eth1 icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size 262144 bytes

21:37:34.740360 IP 192.168.200.11 > 172.16.0.20: ICMP echo request, id 2, seq 1, length 208

21:37:34.741099 IP 172.16.0.20 > 192.168.200.11: ICMP echo reply, id 2, seq 1, length 208

21:37:35.741898 IP 192.168.200.11 > 172.16.0.20: ICMP echo request, id 2, seq 2, length 208

21:37:35.742631 IP 172.16.0.20 > 192.168.200.11: ICMP echo reply, id 2, seq 2, length 208

21:37:36.743809 IP 192.168.200.11 > 172.16.0.20: ICMP echo request, id 2, seq 3, length 208

vagrant@openvpn-1:~$ sudo tcpdump -i eth2 icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

21:38:46.277016 IP 192.168.200.11 > 172.16.0.20: ICMP echo request, id 3, seq 1, length 208

21:38:46.277287 IP 172.16.0.20 > 192.168.200.11: ICMP echo reply, id 3, seq 1, length 208

21:38:47.286199 IP 192.168.200.11 > 172.16.0.20: ICMP echo request, id 3, seq 2, length 208

21:38:47.286862 IP 172.16.0.20 > 192.168.200.11: ICMP echo reply, id 3, seq 2, length 208

21:38:48.287739 IP 192.168.200.11 > 172.16.0.20: ICMP echo request, id 3, seq 3, length 208

This confirms that the ICMP traffic passes through the server in the middle, from the client's eth1 interface at 192.168.200.11 to the web server's eth1 interface at 172.16.0.20.
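The sizes in these captures follow from the header sizes. `ping -s 200` sends a 200-byte ICMP payload; adding the 8-byte ICMP header gives the 208 bytes that ping and tcpdump report, and adding a 20-byte IPv4 header (assuming no IP options) gives the 228 in ping's `200(228)`. A small sketch of that arithmetic; `icmp_sizes` is a hypothetical name:

```shell
#!/bin/sh
# Sizes reported for a given `ping -s` payload, assuming an 8-byte ICMP
# header and a 20-byte IPv4 header without options.
icmp_sizes() {
  local payload="$1" icmp ip
  icmp=$((payload + 8))   # ICMP message: header + payload
  ip=$((icmp + 20))       # IPv4 packet: IP header + ICMP message
  echo "$icmp $ip"
}
```

`icmp_sizes 200` prints `208 228`, matching the ping banner and the tcpdump `length 208` lines.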

It is now time to install OpenVPN on the server in the middle and create a VPN tunnel over the link between 192.168.200.11 on the client and 192.168.200.10 on the server in the middle. The aim is to encrypt all traffic leaving the client for the web server.

Installing OpenVPN Server

Setting up a basic OpenVPN Server on Ubuntu can be done by downloading the appropriate installation script, making it executable and running it.

wget https://git.io/vpn -O openvpn-ubuntu-install.sh

chmod -v +x openvpn-ubuntu-install.sh

sudo ./openvpn-ubuntu-install.sh

When run, the script prompts for a few parameters and then configures the server. It also generates the matching OpenVPN client configuration.

Welcome to this OpenVPN road warrior installer!

Which IPv4 address should be used?

1) 10.0.2.15

2) 192.168.200.10

3) 172.16.0.10

IPv4 address [1]: 2

This server is behind NAT. What is the public IPv4 address or hostname?

Public IPv4 address / hostname [151.230.176.38]: 192.168.200.10

Which protocol should OpenVPN use?

1) UDP (recommended)

2) TCP

Protocol [1]:

What port should OpenVPN listen to?

Port [1194]:

Select a DNS server for the clients:

1) Current system resolvers

2) Google

3) 1.1.1.1

4) OpenDNS

5) Quad9

6) AdGuard

DNS server [1]:


Enter a name for the first client:

Name [client]:

OpenVPN installation is ready to begin.

Press any key to continue...

The client configuration is available in: /root/client.ovpn

New clients can be added by running this script again.

vagrant@openvpn-1:~$

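The client.ovpn file can now be copied to the client machine via scp. Because the installer writes it to /root, it first needs to be copied somewhere the vagrant user can read (with sudo) before it can be transferred.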

Note that in this lab everything is kept local, so the "public" address given to the installer is simply the OpenVPN Virtual Machine's eth1 address, 192.168.200.10. The aim is to protect the traffic that crosses the 192.168.200.0/24 network between the Virtual Machines.
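Before or after copying client.ovpn across, it is worth checking which endpoint it points at. A minimal sketch, assuming the file contains a standard `remote <host> <port>` directive; the helper name `ovpn_remote` is hypothetical:

```shell
#!/bin/sh
# Print host and port from the first `remote` directive in an OpenVPN
# client config file; fail if the file has no such directive.
ovpn_remote() {
  awk '
    $1 == "remote" { print $2, $3; found = 1; exit }
    END { exit !found }
  ' "$1"
}
```

For this lab, `ovpn_remote client.ovpn` should print `192.168.200.10 1194`: the address entered at the installer's public address prompt and the default port.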

The installer has also created a new tunnel interface, tun0, with the address 10.8.0.1/24, and added a matching route for 10.8.0.0/24 to the OpenVPN server's routing table.

vagrant@openvpn-1:~$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:14:86:db brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic eth0

valid_lft 76475sec preferred_lft 76475sec

inet6 fe80::a00:27ff:fe14:86db/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:3e:f5:ae brd ff:ff:ff:ff:ff:ff

inet 192.168.200.10/24 brd 192.168.200.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe3e:f5ae/64 scope link

valid_lft forever preferred_lft forever

4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:45:47:54 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.10/24 brd 172.16.0.255 scope global eth2

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe45:4754/64 scope link

valid_lft forever preferred_lft forever

5: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 100

link/none

inet 10.8.0.1/24 brd 10.8.0.255 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::71ba:b44:d082:cbf4/64 scope link stable-privacy

valid_lft forever preferred_lft forever

vagrant@openvpn-1:~$ ip route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.8.0.0/24 dev tun0 proto kernel scope link src 10.8.0.1

172.16.0.0/24 dev eth2 proto kernel scope link src 172.16.0.10

192.168.200.0/24 dev eth1 proto kernel scope link src 192.168.200.10
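The tunnel address can also be extracted from the `ip addr` listing in a script; on a live system, `ip -4 addr show dev tun0` already narrows the output to one interface. A sketch with a hypothetical helper name, `iface_ipv4`, assuming the usual `N: name:` header lines:

```shell
#!/bin/sh
# Read `ip addr` output on stdin and print the IPv4 address(es), in CIDR
# form, of the named interface. Header lines look like "5: tun0: <...>";
# address lines start with "inet" (indented in real output).
iface_ipv4() {
  awk -v want="$1" '
    /^[0-9]+: / { name = $2; sub(/:$/, "", name); sub(/@.*/, "", name); next }
    $1 == "inet" && name == want { print $2; found = 1 }
    END { exit !found }
  '
}
```

`ip addr | iface_ipv4 tun0` should print `10.8.0.1/24` on the OpenVPN server, and `10.8.0.2/24` on the client once it is connected.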

Starting up OpenVPN as a Service

Next, we start OpenVPN as a systemd service on the openvpn Virtual Machine and enable it so that it also starts at boot.

vagrant@openvpn-1:~$ sudo systemctl start openvpn-server@server.service

vagrant@openvpn-1:~$ sudo systemctl enable openvpn-server@server.service

vagrant@openvpn-1:~$ sudo systemctl status openvpn-server@server.service

● openvpn-server@server.service - OpenVPN service for server

Loaded: loaded (/lib/systemd/system/openvpn-server@.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2021-03-13 21:47:49 UTC; 7min ago

Docs: man:openvpn(8)

https://community.openvpn.net/openvpn/wiki/Openvpn24ManPage

https://community.openvpn.net/openvpn/wiki/HOWTO

Main PID: 43562 (openvpn)

Status: "Initialization Sequence Completed"

Tasks: 1 (limit: 2281)

Memory: 1.2M

CGroup: /system.slice/system-openvpn\x2dserver.slice/openvpn-server@server.service

└─43562 /usr/sbin/openvpn --status /run/openvpn-server/status-server.log --status-version 2 --suppress-timestamps --config server.conf

Mar 13 21:47:49 openvpn-1 openvpn[43562]: Could not determine IPv4/IPv6 protocol. Using AF_INET

Mar 13 21:47:49 openvpn-1 openvpn[43562]: Socket Buffers: R=[212992->212992] S=[212992->212992]

Mar 13 21:47:49 openvpn-1 openvpn[43562]: UDPv4 link local (bound): [AF_INET]192.168.200.10:1194

Mar 13 21:47:49 openvpn-1 openvpn[43562]: UDPv4 link remote: [AF_UNSPEC]

Mar 13 21:47:49 openvpn-1 openvpn[43562]: GID set to nogroup

Mar 13 21:47:49 openvpn-1 openvpn[43562]: UID set to nobody

Mar 13 21:47:49 openvpn-1 openvpn[43562]: MULTI: multi_init called, r=256 v=256

Mar 13 21:47:49 openvpn-1 openvpn[43562]: IFCONFIG POOL: base=10.8.0.2 size=252, ipv6=0

Mar 13 21:47:49 openvpn-1 openvpn[43562]: IFCONFIG POOL LIST

Mar 13 21:47:49 openvpn-1 openvpn[43562]: Initialization Sequence Completed
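`sudo systemctl enable --now openvpn-server@server.service` combines the start and enable steps above. To check from a script that the server came up, the same messages can be searched for in the journal. A sketch with hypothetical helper names:

```shell
#!/bin/sh
# Succeed if OpenVPN log text on stdin contains the message printed once
# the server has finished starting.
openvpn_ready() {
  grep -q 'Initialization Sequence Completed'
}

# Print the address:port from the first "link local (bound)" log line.
openvpn_bound_addr() {
  sed -n 's/.*link local (bound): \[AF_INET6*]//p' | head -n 1
}
```

For example, `sudo journalctl -u openvpn-server@server.service --no-pager | openvpn_ready && echo up`. This only shows that the message appeared somewhere in the log; `systemctl is-active openvpn-server@server.service` reports whether the service is running now. Fed the log above, `openvpn_bound_addr` prints `192.168.200.10:1194`.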

Setting up the Client Machine

Once client.ovpn has been copied across, we can install the OpenVPN client software on the client, put the configuration in place and start the connection.

vagrant@client-1:~$ sudo apt install openvpn

sudo cp client.ovpn /etc/openvpn/client.conf

sudo openvpn --client --config /etc/openvpn/client.conf

vagrant@client-1:~$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:14:86:db brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic eth0

valid_lft 76001sec preferred_lft 76001sec

inet6 fe80::a00:27ff:fe14:86db/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:f0:b6:2a brd ff:ff:ff:ff:ff:ff

inet 192.168.200.11/24 brd 192.168.200.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fef0:b62a/64 scope link

valid_lft forever preferred_lft forever

5: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 100

link/none

inet 10.8.0.2/24 brd 10.8.0.255 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::eb83:64ee:5f42:c433/64 scope link stable-privacy

valid_lft forever preferred_lft forever
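Run as above, `openvpn` stays in the foreground and holds the terminal. One alternative is to add `--daemon` so that it forks into the background, then wait for tun0 to appear before checking addresses and routes. A minimal retry helper; the name `wait_for` is hypothetical:

```shell
#!/bin/sh
# Retry a command once per second until it succeeds or TIMEOUT seconds
# have passed; returns the status of the final attempt.
wait_for() {
  local timeout="$1" i=0
  shift
  while [ "$i" -lt "$timeout" ]; do
    "$@" >/dev/null 2>&1 && return 0
    sleep 1
    i=$((i + 1))
  done
  "$@" >/dev/null 2>&1   # one last attempt after the final sleep
}
```

For example: `sudo openvpn --config /etc/openvpn/client.conf --daemon`, then `wait_for 15 ip link show dev tun0 && ip addr show dev tun0`.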

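In this lab Vagrant uses eth0 as the default gateway. When OpenVPN redirects the client's default route, it also adds a host route to the server's address, 192.168.200.10, via the existing gateway (10.0.2.2 on eth0), so that the tunnel's own packets do not loop back into the tunnel. Here that would send them out of eth0, so this host route must be deleted to let the server be reached via eth1. We must also remove the earlier static route to the web server's network via eth1: it is more specific than the routes OpenVPN adds, so it would keep that traffic out of the tunnel.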

vagrant@client-1:~$ sudo ip route del 192.168.200.10 via 10.0.2.2 dev eth0

vagrant@client-1:~$ sudo ip route del 172.16.0.0/24 via 192.168.200.10 dev eth1

vagrant@client-1:~$ tracepath -n 192.168.200.10

1?: [LOCALHOST] pmtu 1500

1: 192.168.200.10 0.809ms reached

1: 192.168.200.10 0.675ms reached

Resume: pmtu 1500 hops 1 back 1
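`ip route get` asks the kernel which route it would actually use for an address, which is a quicker check than tracepath. A sketch that extracts the outgoing interface from its output; the helper name `egress_dev` is hypothetical:

```shell
#!/bin/sh
# Read `ip route get <addr>` output on stdin, e.g.
# "192.168.200.10 dev eth1 src 192.168.200.11 uid 1000",
# and print the interface name that follows "dev".
egress_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}
```

`ip route get 192.168.200.10 | egress_dev` should print `eth0` while the host route via 10.0.2.2 is present, and `eth1` once it has been deleted.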

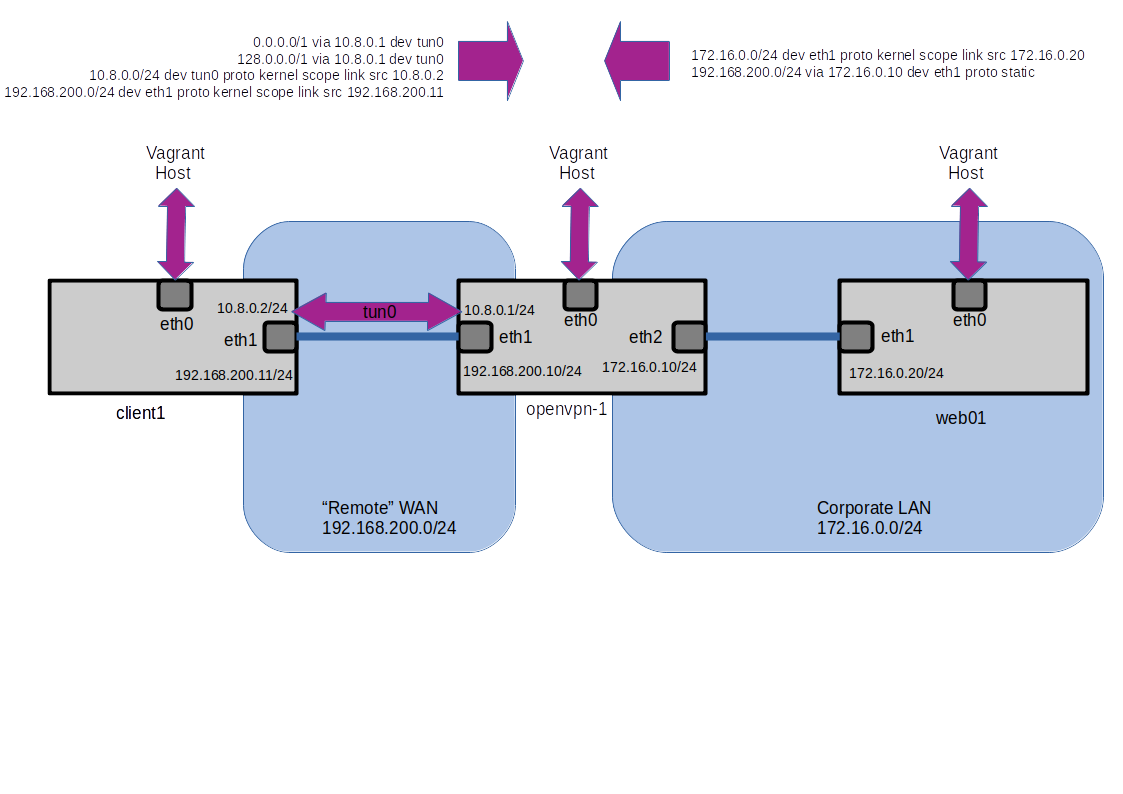

vagrant@client-1:~$ ip route

0.0.0.0/1 via 10.8.0.1 dev tun0

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.8.0.0/24 dev tun0 proto kernel scope link src 10.8.0.2

128.0.0.0/1 via 10.8.0.1 dev tun0

172.16.0.0/24 via 192.168.200.10 dev eth1 proto static

192.168.200.0/24 dev eth1 proto kernel scope link src 192.168.200.11

The client has added two new routes, 0.0.0.0/1 and 128.0.0.0/1, both via 10.8.0.1, which together send all traffic out of the tunnel interface.
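Why these routes work, and why the static 172.16.0.0/24 route had to go, comes down to longest-prefix matching: the kernel uses the most specific route that contains the destination. The sketch below reimplements that rule in shell purely for illustration, ignoring metrics, route types and policy routing; `ip_to_int` and `lpm` are hypothetical helper names.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print the most specific of the given prefixes that contains ADDR.
# Usage: lpm ADDR PREFIX...
lpm() {
  local addr best="" best_len=-1 prefix net len mask
  addr=$(ip_to_int "$1")
  shift
  for prefix in "$@"; do
    net=$(ip_to_int "${prefix%/*}")
    len=${prefix#*/}
    if [ "$len" -eq 0 ]; then
      mask=0   # /0 matches everything
    else
      mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    fi
    if [ $((addr & mask)) -eq $((net & mask)) ] && [ "$len" -gt "$best_len" ]; then
      best=$prefix
      best_len=$len
    fi
  done
  [ -n "$best" ] && echo "$best"
}
```

`lpm 172.16.0.20 0.0.0.0/0 0.0.0.0/1 128.0.0.0/1` picks `128.0.0.0/1`, so the web server is reached through the tunnel without touching the default route. Adding `172.16.0.0/24` to the list makes that static route win instead, bypassing the tunnel. Similarly, `lpm 192.168.200.10 0.0.0.0/1 128.0.0.0/1 192.168.200.0/24` picks the connected eth1 route, so the tunnel's own packets are not routed back into the tunnel.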

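With these routes in place and the static 172.16.0.0/24 route removed, traffic from the client to the web server is routed via the tun0 interface rather than directly out of eth1, although the encrypted tunnel packets themselves still travel over the eth1 link. On that link the traffic is carried encrypted inside UDP on port 1194.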

vagrant@openvpn-1:~$ sudo tcpdump -ni eth1

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size 262144 bytes

22:24:10.968408 IP 192.168.200.11.45357 > 192.168.200.10.1194: UDP, length 252

22:24:10.969500 IP 192.168.200.10.1194 > 192.168.200.11.45357: UDP, length 252

22:24:11.969848 IP 192.168.200.11.45357 > 192.168.200.10.1194: UDP, length 252

22:24:11.970961 IP 192.168.200.10.1194 > 192.168.200.11.45357: UDP, length 252

22:24:12.971290 IP 192.168.200.11.45357 > 192.168.200.10.1194: UDP, length 252

vagrant@openvpn-1:~$ sudo tcpdump -ni tun0

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on tun0, link-type RAW (Raw IP), capture size 262144 bytes

22:24:30.999390 IP 10.8.0.2 > 172.16.0.20: ICMP echo request, id 9, seq 492, length 208

22:24:31.000122 IP 172.16.0.20 > 10.8.0.2: ICMP echo reply, id 9, seq 492, length 208

22:24:31.999759 IP 10.8.0.2 > 172.16.0.20: ICMP echo request, id 9, seq 493, length 208

22:24:32.000430 IP 172.16.0.20 > 10.8.0.2: ICMP echo reply, id 9, seq 493, length 208

We can see that the ICMP traffic now travels over tun0 and carries the client's tunnel address, 10.8.0.2, as its source instead of 192.168.200.11. Meanwhile, the only traffic on eth1 is UDP on port 1194.
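Summarising the two captures as flows makes the difference easy to see. The sizes also line up: the 208-byte ICMP message in the tun0 capture is a 228-byte IP packet, which travels inside 252-byte UDP payloads on eth1, so the remaining 24 bytes per packet are OpenVPN's own framing (the exact amount depends on the negotiated cipher and options, which are not shown here). A sketch assuming tcpdump's default one-line output format; `flows` and `tunnel_overhead` are hypothetical names:

```shell
#!/bin/sh
# Summarise tcpdump lines from stdin as "src > dst PROTO", printing each
# distinct flow once, in the order first seen. Non-packet lines are skipped.
flows() {
  awk '$2 == "IP" {
    dst = $5; sub(/:$/, "", dst)       # strip trailing colon from destination
    proto = $6; sub(/,$/, "", proto)   # "UDP," -> "UDP"
    key = $3 " > " dst " " proto
    if (!(key in seen)) { seen[key] = 1; print key }
  }'
}

# Per-packet tunnel overhead: outer UDP payload length minus inner IP packet size.
tunnel_overhead() {
  echo $(( $1 - $2 ))
}
```

Piping the eth1 capture above through `flows` gives the single line `192.168.200.11.45357 > 192.168.200.10.1194 UDP`, while the tun0 capture yields the ICMP flows between 10.8.0.2 and 172.16.0.20; `tunnel_overhead 252 228` gives 24.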

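The installer also added a source NAT rule on the OpenVPN server, which can be listed with iptables:

vagrant@openvpn-1:~$ sudo iptables -t nat -L -n -v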

Chain PREROUTING (policy ACCEPT 227 packets, 188K bytes)

pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 146 packets, 145K bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 9 packets, 726 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 19 packets, 10350 bytes)

pkts bytes target prot opt in out source destination

3 1788 SNAT all -- * * 10.8.0.0/24 !10.8.0.0/24 to:192.168.200.10
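This rule source-NATs anything from the tunnel subnet 10.8.0.0/24 that is headed outside that subnet, rewriting its source to 192.168.200.10. Requests from the client should therefore reach the web server from 192.168.200.10 rather than 10.8.0.2, and the web server's existing static route for 192.168.200.0/24 brings the replies back to the OpenVPN machine. The command below is a sketch of an equivalent rule, not necessarily the installer's exact invocation; `snat_rule_cmd` is a hypothetical helper that only prints the command.

```shell
#!/bin/sh
# Print an iptables command equivalent to the SNAT rule listed above.
# ACTION: -A (append), -C (check whether it exists) or -D (delete).
snat_rule_cmd() {
  local action="$1" subnet="$2" to="$3"
  echo "iptables -t nat $action POSTROUTING -s $subnet ! -d $subnet -j SNAT --to-source $to"
}
```

For example, `sudo $(snat_rule_cmd -C 10.8.0.0/24 192.168.200.10) && echo present` checks for the rule without changing anything.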

Conclusions

This Lab has shown how to build a basic OpenVPN setup using VirtualBox and Vagrant.

It has highlighted the importance of host routing within the Virtual Machines to ensure that traffic is sent out of the correct interface, particularly when multiple interfaces are involved. Vagrant makes it easy to create Virtual Machines, but because it creates a management interface on eth0 that also serves as the default gateway, further routing changes were needed to stop traffic being routed via the Host.

OpenVPN also adds routes on the client, and the installer adds a source NAT rule on the server that rewrites the source address of tunnel traffic before it reaches the remote network. The 0.0.0.0/1 and 128.0.0.0/1 routes together match all traffic leaving the client and send it through the tunnel; because each is more specific than the default route, the normal default route does not need to be overwritten.

tcpdump confirmed that the traffic crossing the underlay network was no longer ICMP but encrypted UDP traffic on OpenVPN's standard port, 1194.

Inside the tunnel, the same traffic appeared as ICMP with the client's tunnel address, 10.8.0.2, as its source.

While the Lab is not a realistic scenario, it shows how inspecting routing tables and capturing packets with tcpdump can verify that a VPN is working.