Services provide a stable way to reach PODs, which by their nature may be deleted and re-created with different IP addresses within the cluster. This means that inter-POD connectivity cannot rely on the IP addresses of the PODs themselves.

There are several different types of Service, and in this post we'll look at two of them:

- ClusterIP Service

- NodePort Service

The two serve slightly different purposes: the ClusterIP Service provides connectivity within the cluster, while the NodePort Service allows access from outside it.

Setting up a ClusterIP Service

Our first Deployment will run a POD that displays its hostname and IP address. This simple application is useful for confirming which container is actually serving an HTTP request.

We'll create the PODs using the following Deployment manifest (saved as jason-deployment.yaml).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jason-deployment
  labels:
    app: jason-app
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: jason-app
  template:
    metadata:
      labels:
        app: jason-app
    spec:
      containers:
      - name: jason-pod
        image: salterje/flask_test:0.10
        ports:
        - containerPort: 5000
```

The image that we'll use is a Flask container that listens on port 5000 and displays a simple webpage with the hostname and IP details of the container.

The PODs are started by applying the YAML file, and we'll run 3 replicas for the time being.

```
kubectl apply -f jason-deployment.yaml
```

This can be checked.

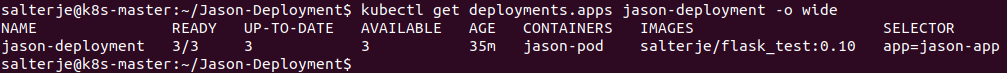

```
kubectl get deployments.apps jason-deployment -o wide
```

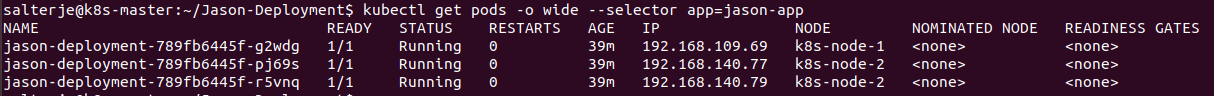

We can see that 3 replicas are now running within the cluster. Next we need to find their IP addresses and which Node each one is running on. To keep the display manageable, we'll filter the output with the same selector that we used to build the Deployment.

```
kubectl get pods -o wide --selector app=jason-app
```
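Conceptually, the `--selector app=jason-app` filter keeps only the PODs whose labels contain that key/value pair. The Python sketch below models equality-based matching for simple `key=value` terms only (it does not handle `!=` or set-based selectors); the POD names and labels are sample data borrowed from this post, not live cluster output.

```python
def parse_selector(selector: str) -> dict:
    """Parse an equality-based selector such as 'app=jason-app,tier=web'."""
    pairs = {}
    for term in selector.split(","):
        key, _, value = term.partition("=")
        pairs[key.strip()] = value.strip()
    return pairs


def matches(selector: str, labels: dict) -> bool:
    """A POD matches only if every key=value pair in the selector is in its labels."""
    return all(labels.get(key) == value for key, value in parse_selector(selector).items())


# Sample data (not real cluster output): POD name -> labels
pods = {
    "jason-deployment-789fb6445f-g2wdg": {"app": "jason-app", "pod-template-hash": "789fb6445f"},
    "jason-deployment-789fb6445f-r5vnq": {"app": "jason-app", "pod-template-hash": "789fb6445f"},
    "unrelated-pod": {"app": "something-else"},
}

selected = [name for name, labels in pods.items() if matches("app=jason-app", labels)]
print(selected)
# ['jason-deployment-789fb6445f-g2wdg', 'jason-deployment-789fb6445f-r5vnq']
```

Because the selector only needs to be a subset of a POD's labels, extra labels such as the `pod-template-hash` label that the Deployment adds do not stop a POD from matching.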

As a quick final test, a curl command can be run from one of the Nodes against a POD's IP address. Because we are connecting directly to the container itself, this needs to use port 5000.

```
salterje@k8s-node-1:~$ curl 192.168.109.69:5000
<!DOCTYPE html>
<html lang="en" dir="ltr">
<head>
<meta charset="utf-8">
<title>This is my App</title>
<link rel="stylesheet" href="/static/css/main.css"
</head>
<body>
<h1 class="logo">Welcome to Jason's Test Site V0.10 </h1>
<p>Hostname: jason-deployment-789fb6445f-g2wdg</p>
<p>IP Address: 192.168.109.69</p>
<p>OS Type: #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 </p>
<br>
<p>Current Time & Date: Mon Jul 27 16:11:15 2020 </p>
<br>
<p> This is a table of environmental values: </p>
<table>
<tr>
<th>KEY</th>
<th>VALUE</th>
</tr>
<td> KEY1 </td>
<td> None </td>
</tr>
</tr>
<td> KEY2 </td>
<td> None </td>
</tr>
</table>
</body>
```

The hostname and IP address displayed match those shown in the POD details. If we were to delete the POD, the ReplicaSet would create a replacement with a different IP address and hostname (and possibly on a different Node).

This would cause real problems for inter-POD communication, as there is no guarantee which IP address or hostname a POD will be assigned.
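A toy model makes the problem concrete. The sketch below is a heavily simplified stand-in for the ReplicaSet controller, not its real logic: the helper `new_pod`, its random name suffix and its 192.168.140.x address allocator are hypothetical and exist only to show that a replacement POD comes back with a different name and IP.

```python
import itertools
import random
import string

# Hypothetical IP allocator for the sketch: hands out 192.168.140.100, .101, ...
_next_ip = itertools.count(100)


def new_pod(prefix: str) -> dict:
    """Create a fake POD with a random 5-character name suffix and a fresh IP."""
    suffix = "".join(random.choices(string.ascii_lowercase + string.digits, k=5))
    return {"name": f"{prefix}-{suffix}", "ip": f"192.168.140.{next(_next_ip)}"}


def reconcile(pods: list, desired: int, prefix: str) -> list:
    """Simplified ReplicaSet step: create PODs until the desired count is reached."""
    pods = list(pods)
    while len(pods) < desired:
        pods.append(new_pod(prefix))
    return pods


prefix = "jason-deployment-789fb6445f"
pods = reconcile([], desired=3, prefix=prefix)
deleted = pods.pop(0)                            # simulate deleting one POD
pods = reconcile(pods, desired=3, prefix=prefix)  # the "ReplicaSet" replaces it

current_ips = {p["ip"] for p in pods}
print(len(pods), deleted["ip"] in current_ips)  # 3 False
```

Any client that had stored the deleted POD's address would now be pointing at nothing.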

This is where the concept of a ClusterIP Service comes in: the Service has a constant address and automatically routes traffic to whichever PODs are currently associated with it.

Creating a Cluster IP Service

We'll now create a Service associated with our Deployment, using the following manifest (saved as jason-service.yaml).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: jason-service
  namespace: default
spec:
  selector:
    app: jason-app
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5000
```

This Service is linked to the PODs that have the label app: jason-app by a selector (the same mechanism a Deployment uses to select the PODs it manages).

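The Service YAML also defines the protocol and port that it listens on (in this case TCP port 80). The targetPort is the port the containers in the linked PODs listen on (5000, matching the containerPort in the Deployment). As no type is specified, the Service defaults to type ClusterIP.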
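Taken together, the selector and the port fields mean the Service tracks a set of endpoints (each a matching POD's IP plus the targetPort) and forwards connections on its own port to one of them. The Python sketch below is an illustrative model of that bookkeeping, not the Kubernetes endpoints controller; the POD names and IPs are sample values taken from this post, and the `ready` flag is a simplified stand-in for POD readiness.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True  # simplified stand-in for the POD's readiness condition


def endpoints(selector: dict, target_port: int, pods: list) -> list:
    """Return 'ip:targetPort' for every ready POD whose labels match the selector."""
    return [
        f"{pod.ip}:{target_port}"
        for pod in pods
        if pod.ready and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


# From jason-service.yaml: Service port 80 -> targetPort 5000
PORT_MAP = {80: 5000}
selector = {"app": "jason-app"}

pods = [
    Pod("jason-deployment-789fb6445f-g2wdg", "192.168.109.69", {"app": "jason-app"}),
    Pod("jason-deployment-789fb6445f-r5vnq", "192.168.140.79", {"app": "jason-app"}),
    Pod("jason-deployment-789fb6445f-pj69s", "192.168.140.77", {"app": "jason-app"}),
]
print(endpoints(selector, PORT_MAP[80], pods))
# ['192.168.109.69:5000', '192.168.140.79:5000', '192.168.140.77:5000']
```

Marking a POD as not ready (or deleting it) simply drops it from the list, which is how the endpoints track PODs as they come and go.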

We can bring the service up in the normal way.

```
kubectl apply -f jason-service.yaml
```

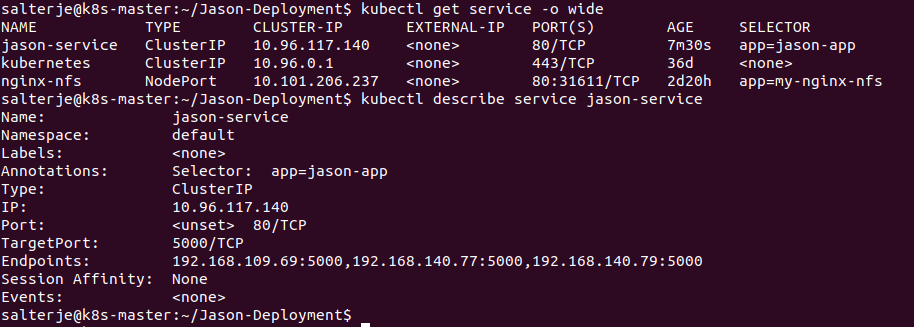

We can confirm the creation of the service and the links to the PODs.

```
kubectl get service -o wide
kubectl describe service jason-service
```

Describing the Service also shows its endpoints, which are the PODs it connects to. Traffic sent to the Service's IP address and port is therefore load balanced over the 3 PODs that form the Deployment.
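On each Node this load balancing is carried out by kube-proxy. In its iptables mode (one of several modes; IPVS and others work differently), the rules for a Service with n endpoints are tried in order, the first matching with probability 1/n, the next with 1/(n-1), and so on until the last always matches. The Python sketch below reproduces that chain to show why each POD receives an equal share of new connections; it models the idea and is not kube-proxy's code.

```python
import random
from collections import Counter
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each rule in the chain: 1/n, 1/(n-1), ..., 1/1."""
    return [Fraction(1, n - k) for k in range(n)]


def overall_shares(n: int) -> List[Fraction]:
    """Probability that each endpoint ends up receiving a new connection."""
    shares, reach = [], Fraction(1)
    for p in rule_probabilities(n):
        shares.append(reach * p)  # the packet reaches this rule and matches it
        reach *= 1 - p            # otherwise it falls through to the next rule
    return shares


def pick(endpoints: List[str], rng: random.Random) -> str:
    """Walk the rules in order, as a new connection would walk the chain."""
    for endpoint, p in zip(endpoints, rule_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    return endpoints[-1]  # not reached in practice: the last rule has probability 1


eps = ["192.168.109.69:5000", "192.168.140.79:5000", "192.168.140.77:5000"]
print(overall_shares(len(eps)))
# [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
rng = random.Random(42)
print(Counter(pick(eps, rng) for _ in range(30_000)))  # roughly 10,000 each
```

The decreasing probabilities compensate for the traffic already claimed by earlier rules, so the overall split is uniform without kube-proxy having to track any per-connection state for the choice.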

The ClusterIP address remains constant and can be used from anywhere within the cluster, so any internal communication with the associated PODs can make use of it.

As PODs come and go, the endpoints are adjusted automatically, while the Service keeps a permanent address for routing traffic to the PODs.

We can demonstrate this by running curl against the Service's ClusterIP (10.96.117.140) from one of the Nodes. Each request is routed to one of the associated PODs, which we can confirm because the hostname and IP address on the returned page vary between requests. No port is given, so curl connects on port 80, which the Service maps to targetPort 5000 on the PODs.

```
## First curl command goes to one POD
salterje@k8s-node-2:~$ curl 10.96.117.140
<!DOCTYPE html>
<html lang="en" dir="ltr">
<head>
<meta charset="utf-8">
<title>This is my App</title>
<link rel="stylesheet" href="/static/css/main.css"
</head>
<body>
<h1 class="logo">Welcome to Jason's Test Site V0.10 </h1>
<p>Hostname: jason-deployment-789fb6445f-r5vnq</p>
<p>IP Address: 192.168.140.79</p>
<p>OS Type: #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 </p>
<br>
<p>Current Time & Date: Mon Jul 27 16:33:30 2020 </p>
<br>
<p> This is a table of environmental values: </p>
<table>
<tr>
<th>KEY</th>
<th>VALUE</th>
</tr>
<td> KEY1 </td>
<td> None </td>
</tr>
</tr>
<td> KEY2 </td>
<td> None </td>
</tr>
</table>
</body>
```

```
## Second curl command goes to a different POD
salterje@k8s-node-2:~$ curl 10.96.117.140
<!DOCTYPE html>
<html lang="en" dir="ltr">
<head>
<meta charset="utf-8">
<title>This is my App</title>
<link rel="stylesheet" href="/static/css/main.css"
</head>
<body>
<h1 class="logo">Welcome to Jason's Test Site V0.10 </h1>
<p>Hostname: jason-deployment-789fb6445f-pj69s</p>
<p>IP Address: 192.168.140.77</p>
<p>OS Type: #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 </p>
<br>
<p>Current Time & Date: Mon Jul 27 16:42:30 2020 </p>
<br>
<p> This is a table of environmental values: </p>
<table>
<tr>
<th>KEY</th>
<th>VALUE</th>
</tr>
<td> KEY1 </td>
<td> None </td>
</tr>
</tr>
<td> KEY2 </td>
<td> None </td>
</tr>
</table>
</body>
```
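Rather than comparing the HTML by eye, the hostname and IP can be extracted from each response and counted. This is a minimal standard-library sketch that assumes the page layout shown above (`<p>Hostname: ...</p>` and `<p>IP Address: ...</p>`); the helper names are hypothetical, and `fetch_pages` needs to run somewhere that can reach the ClusterIP, such as one of the Nodes.

```python
import re
from collections import Counter
from typing import List, Optional, Tuple
from urllib.request import urlopen

# Patterns assume the page layout shown above
HOST_RE = re.compile(r"<p>Hostname:\s*(\S+?)</p>")
IP_RE = re.compile(r"<p>IP Address:\s*(\S+?)</p>")


def pod_identity(html: str) -> Optional[Tuple[str, str]]:
    """Extract (hostname, ip) from one response page, or None if either is missing."""
    host, ip = HOST_RE.search(html), IP_RE.search(html)
    if host is None or ip is None:
        return None
    return host.group(1), ip.group(1)


def tally(pages: List[str]) -> Counter:
    """Count how many of the responses each POD served."""
    return Counter(ident for ident in map(pod_identity, pages) if ident is not None)


def fetch_pages(url: str, count: int) -> List[str]:
    """Request the page `count` times, opening a new connection each time."""
    pages = []
    for _ in range(count):
        with urlopen(url, timeout=5) as resp:
            pages.append(resp.read().decode("utf-8"))
    return pages


sample = (
    "<p>Hostname: jason-deployment-789fb6445f-r5vnq</p>\n"
    "<p>IP Address: 192.168.140.79</p>\n"
)
print(pod_identity(sample))
# ('jason-deployment-789fb6445f-r5vnq', '192.168.140.79')
```

From one of the Nodes, `print(tally(fetch_pages("http://10.96.117.140/", 10)))` should list more than one hostname if requests are being spread across the PODs.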

This gives us reliable inter-POD communication within the cluster, but it doesn't allow connections from outside the cluster.

There are various ways of providing external access, but for this post a NodePort Service will be used.

NodePort

A NodePort is a Service that allows connections from outside the cluster to PODs running within it. It opens a port on each of the Nodes, and traffic arriving on that port is forwarded to the associated POD endpoints.

The NodePort is assigned from the default range 30000-32767, and the same port is opened on every Node in the cluster. Traffic arriving on this port is proxied to the Service, whose endpoints are all the associated PODs. By connecting to the external interface of any Node on this port, it is therefore possible to reach the internal PODs from outside.
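The allocation rule can be sketched as follows. This is a simplified Python model, not the API server's allocator: it assumes the default range of 30000-32767 (which cluster administrators can change with the kube-apiserver `--service-node-port-range` flag), rejects a requested nodePort that is outside the range or already taken, and otherwise picks a free port at random, whereas the real allocator uses its own selection strategy.

```python
import random
from typing import Optional, Set

# Default NodePort range 30000-32767 (range() excludes its stop value)
NODE_PORT_RANGE = range(30000, 32768)


class PortAllocationError(ValueError):
    """Raised when a nodePort cannot be assigned."""


def allocate_node_port(used: Set[int], requested: Optional[int] = None,
                       rng: Optional[random.Random] = None) -> int:
    """Validate a requested nodePort, or pick a free one from the range."""
    if requested is not None:
        if requested not in NODE_PORT_RANGE:
            raise PortAllocationError(f"{requested} is not in the range 30000-32767")
        if requested in used:
            raise PortAllocationError(f"{requested} is already allocated")
        port = requested
    else:
        free = [p for p in NODE_PORT_RANGE if p not in used]
        if not free:
            raise PortAllocationError("no free node ports left")
        port = (rng or random.Random()).choice(free)
    used.add(port)
    return port


used: Set[int] = set()
print(allocate_node_port(used, requested=30608))  # 30608, the port used in this post
auto = allocate_node_port(used, rng=random.Random(0))
print(auto in NODE_PORT_RANGE, auto != 30608)  # True True
```

This is why a manifest can pin a nodePort explicitly; leaving the field out lets Kubernetes choose a free port instead.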

We can create a NodePort Service by applying a suitable YAML file (saved as jason-nodeport.yaml).

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: jason-app
  name: jason-deployment
  namespace: default
spec:
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 30608
    port: 5000
    protocol: TCP
    targetPort: 5000
  selector:
    app: jason-app
  sessionAffinity: None
  type: NodePort
```
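The `externalTrafficPolicy: Cluster` field in this manifest decides which PODs a Node may send NodePort traffic to. With `Cluster`, a Node can forward to a POD on any Node, and the client's source address may be rewritten along the way; with `Local`, a Node only uses PODs running on itself. The Python sketch below models that choice; the POD placement is hypothetical, and k8s-node-3 is an imaginary Node with no jason-app PODs.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Endpoint:
    ip: str
    node: str  # Node the POD is scheduled on


def candidates(policy: str, receiving_node: str, endpoints: List[Endpoint]) -> List[Endpoint]:
    """Endpoints a Node may forward NodePort traffic to under externalTrafficPolicy."""
    if policy == "Cluster":
        # Any POD on any Node; the client's source IP may be rewritten (SNAT).
        return list(endpoints)
    if policy == "Local":
        # Only PODs on the receiving Node; an empty list means nothing to forward to.
        return [e for e in endpoints if e.node == receiving_node]
    raise ValueError(f"unknown externalTrafficPolicy: {policy!r}")


# Hypothetical placement; k8s-node-3 is imaginary and runs no jason-app PODs.
eps = [
    Endpoint("192.168.109.69", "k8s-node-1"),
    Endpoint("192.168.140.79", "k8s-node-2"),
    Endpoint("192.168.140.77", "k8s-node-2"),
]
for node in ("k8s-node-1", "k8s-node-3"):
    print(node, len(candidates("Cluster", node, eps)), len(candidates("Local", node, eps)))
# k8s-node-1 3 1
# k8s-node-3 3 0
```

The trade-off is that `Local` preserves the client's source IP, but a Node without a local POD has nothing to forward to; `Cluster`, as used here, lets every Node serve the NodePort.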

The NodePort Service is set up by simply applying the YAML file.

```
kubectl apply -f jason-nodeport.yaml
```

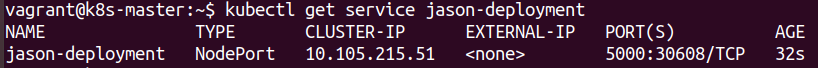

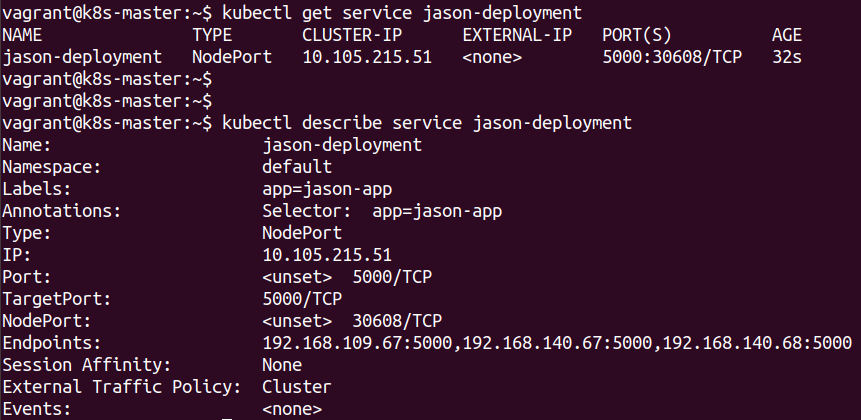

We can also confirm the endpoints connect to our running PODs.

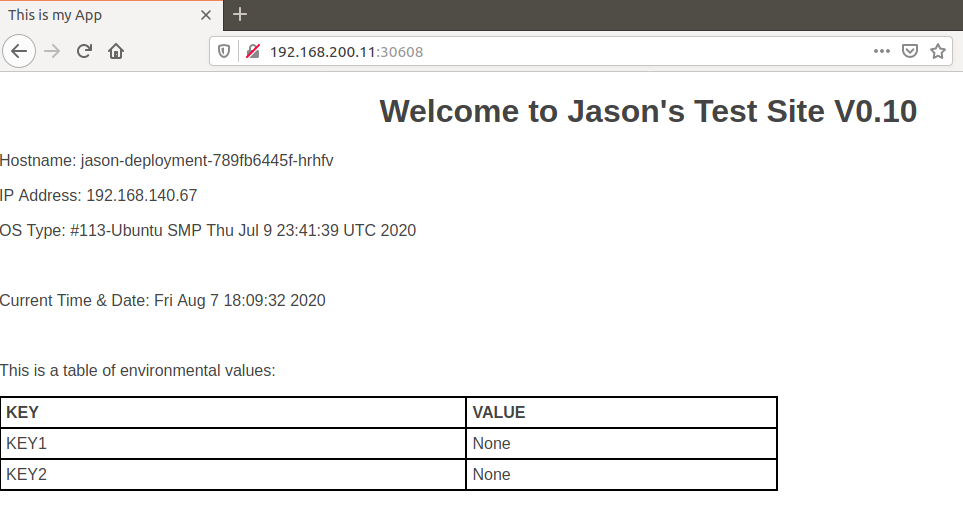

This time we'll test from outside the cluster, using the NodePort to forward the external connection to our running PODs.

It can be seen that the PODs are now reachable from outside the cluster.

They can in fact be reached through the external interface of any Node, using the NodePort (in this case 30608). Each connection is served by one of the running PODs, as the NodePort Service load balances between them.

Conclusions

This post is a simple introduction to how Kubernetes Services cope with running PODs being created and deleted. A Service provides a stable IP address that allows PODs to reach other PODs, and also allows external connections to be made.

The forwarding that makes Services work is carried out by the kube-proxy component, which runs on each Node and maintains the network rules that route Service traffic to the PODs.

A NodePort extends this to external connections by exposing the same port on every Node, so the PODs can be reached through any of the Nodes using that port.