Updating Images within Deployments

One of the drivers for any kind of containerised system is the ability to have all dependencies self-contained within an image. This image can then be used to create running instances of the code with the confidence that it should run in the same way on any platform.

The image that will be run as part of the deployment is defined within the YAML manifest and this post will show various ways that the running PODs can be updated.

Creation of Base Deployment

We'll again start with a basic NGINX deployment which will be created in the normal way.

kubectl create -f test-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: test-nginx
  name: test-nginx
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: test-nginx
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: test-nginx
    spec:
      containers:
      - image: nginx:latest
        imagePullPolicy: Always
        name: nginx

Note

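The line imagePullPolicy: Always within the container spec means that the registry (Docker Hub here, as the image name has no registry prefix) is checked every time a container is started, and the image is pulled again if the copy cached on the node does not match. This matters for a moving tag such as latest.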

The other area of interest when updating POD images is the update strategy. By default Kubernetes uses a rolling update: it creates PODs from the new image and removes old ones in batches, within the limits set by maxSurge (how many PODs may exist above the desired replica count) and maxUnavailable (how many PODs may be unavailable). This means that there are always PODs running within the cluster to maintain the service.

The alternative is the Recreate strategy, which terminates all existing PODs in the Deployment before creating the new ones. This ensures that only one version of the code is ever running, but the service is unavailable until the new PODs are ready.
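
To make the 25% values in the manifest concrete, here is a small shell sketch of how the percentages resolve into POD counts. It relies on the documented rounding behaviour (maxSurge is rounded up, maxUnavailable is rounded down); the function name rolling_limits is our own invention and is not part of kubectl.

```shell
# Hypothetical helper (not part of kubectl): resolve percentage-based
# rolling-update limits into absolute POD counts.
# Arguments: replicas, maxSurge percent, maxUnavailable percent.
rolling_limits() {
  replicas=$1
  surge_pct=$2
  unavailable_pct=$3
  max_surge=$(( (replicas * surge_pct + 99) / 100 ))        # rounded up
  max_unavailable=$(( replicas * unavailable_pct / 100 ))   # rounded down
  echo "maxSurge=$max_surge maxUnavailable=$max_unavailable"
}

rolling_limits 1 25 25     # the single-replica Deployment in this post
rolling_limits 10 25 25    # a larger Deployment for comparison
```

For the one-replica Deployment used here this gives maxSurge=1 and maxUnavailable=0, so the new POD is started before the old one is removed.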

Verify Image Version within POD

There are various ways of confirming the image version running within the POD without having to connect to the container directly (although that is also possible).

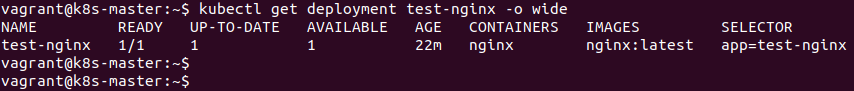

One of the simplest ways to check the running image is to add -o wide when getting the deployment details.

kubectl get deployment test-nginx -o wide

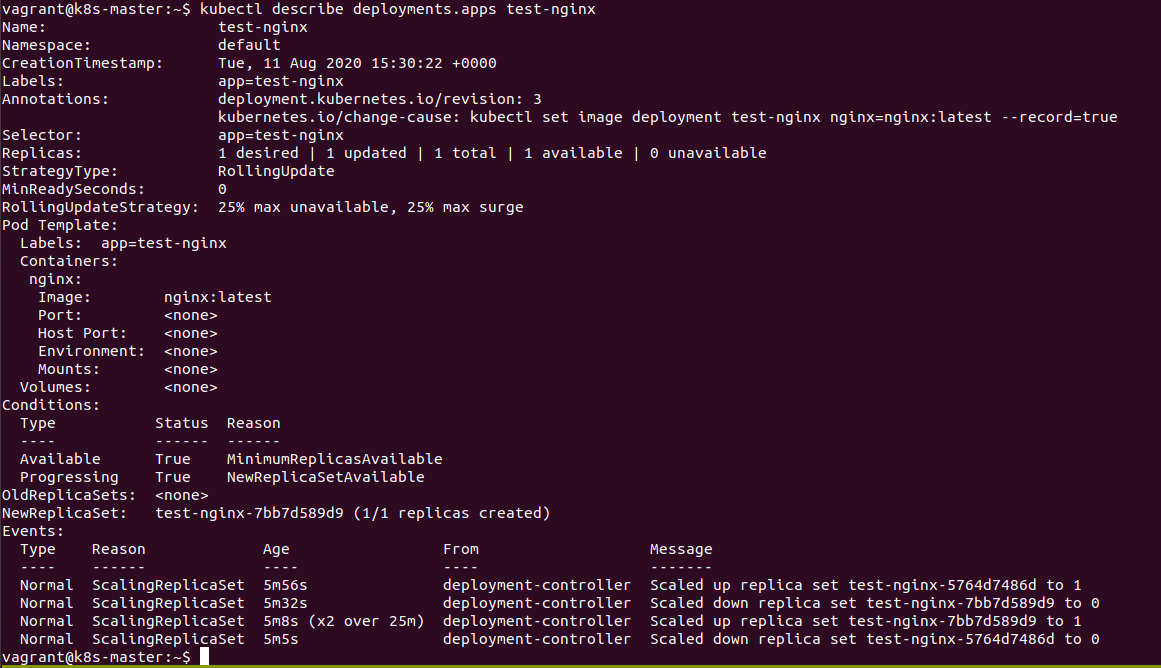

We can also get more information about the Deployment, including the image version, by describing it.

kubectl describe deployment test-nginx

It can be seen that the image used within the container is set to nginx:latest.

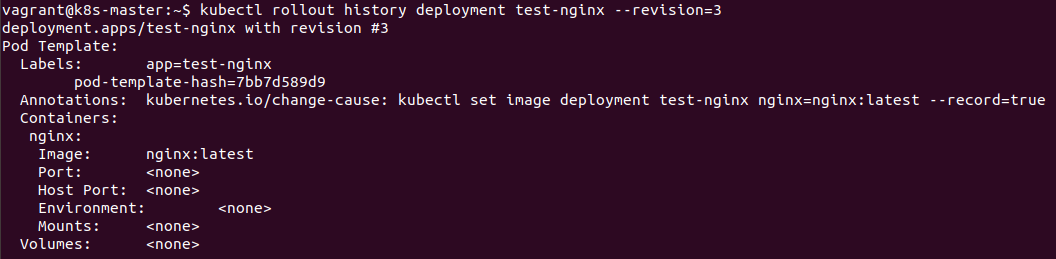
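
If only the image string is wanted, for example in a script, a JSONPath query avoids reading through the full describe output. This is a sketch rather than the only way to do it; the helper name deployment_images is hypothetical.

```shell
# Hypothetical helper: print the image(s) defined in a Deployment's POD template.
# Argument: deployment name.
deployment_images() {
  kubectl get deployment "$1" \
    -o jsonpath='{.spec.template.spec.containers[*].image}'
}

# Usage (needs access to the cluster):
#   deployment_images test-nginx
```

Note that this reports what the Deployment asks for, which is the same information shown by -o wide and describe.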

We can also look at the history of the deployment and in particular the revision that is currently running.

kubectl rollout history deployment test-nginx --revision=3

The disadvantage of looking at the rollout history is that it contains earlier revisions as well, so you need to know which revision number is the current one (the highest number in the list).

Updating the Image within the Deployment

The updating of an image can be done in a number of ways, in a similar manner to when the number of replicas is increased or decreased.

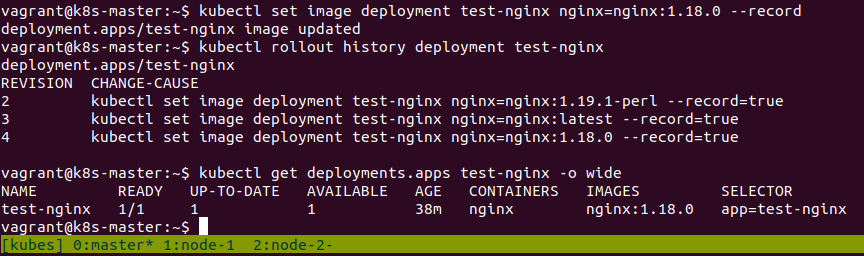

kubectl set image deployment test-nginx nginx=nginx:1.18.0 --record

This command changes the image of the nginx container within the deployment to an older one (in this case 1.18.0) and triggers a rolling update of all the PODs to this image. We have also used --record so that the command is stored as the change cause in the rollout history of the deployment. Note that --record is marked as deprecated in recent versions of kubectl.
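
One way to get a change cause into the history without relying on --record is to set the kubernetes.io/change-cause annotation by hand, and then wait for the rollout to finish. The sketch below strings these steps together; the function name update_image and the wording of the annotation are our own choices.

```shell
# Hypothetical helper: change the image, note why, and wait for the rollout.
# Arguments: deployment name, container name, new image.
update_image() {
  kubectl set image "deployment/$1" "$2=$3" &&
  kubectl annotate "deployment/$1" \
    kubernetes.io/change-cause="image set to $3" --overwrite &&
  kubectl rollout status "deployment/$1"   # blocks until the rollout completes or fails
}

# Usage (needs access to the cluster):
#   update_image test-nginx nginx nginx:1.18.0
```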

We can confirm the change with the same commands we used previously:

kubectl rollout history deployment test-nginx

kubectl get deployment test-nginx -o wide

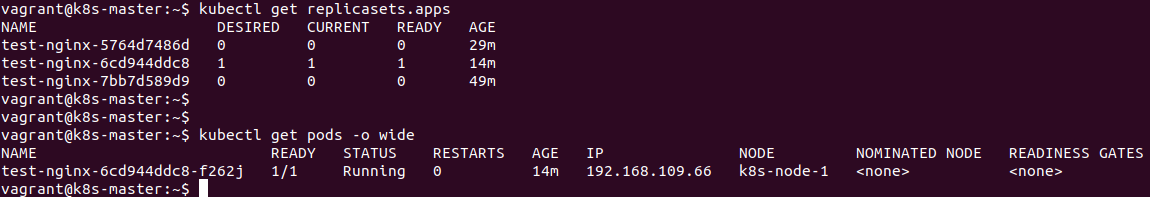

Manipulations of the ReplicaSet for the Deployment

The interesting thing happening behind the scenes is that the Deployment creates a new ReplicaSet for each version of the POD template, and it is these ReplicaSets that ensure the correct number of PODs are deployed.

This can be confirmed by listing the ReplicaSets and by closer inspection of the naming convention for both the PODs and the ReplicaSets. Nothing is actually renamed: each ReplicaSet name ends in a hash of its POD template, and new PODs are created with the name of their owning ReplicaSet as a prefix while the old PODs are deleted.

kubectl get replicasets

It can be seen that the names of the PODs are directly related to the running ReplicaSet.

Kubernetes keeps the previous ReplicaSets, scaled down to zero replicas. This presents an easy way to roll back the image: the previous ReplicaSet is scaled back up to restore the previous image version.

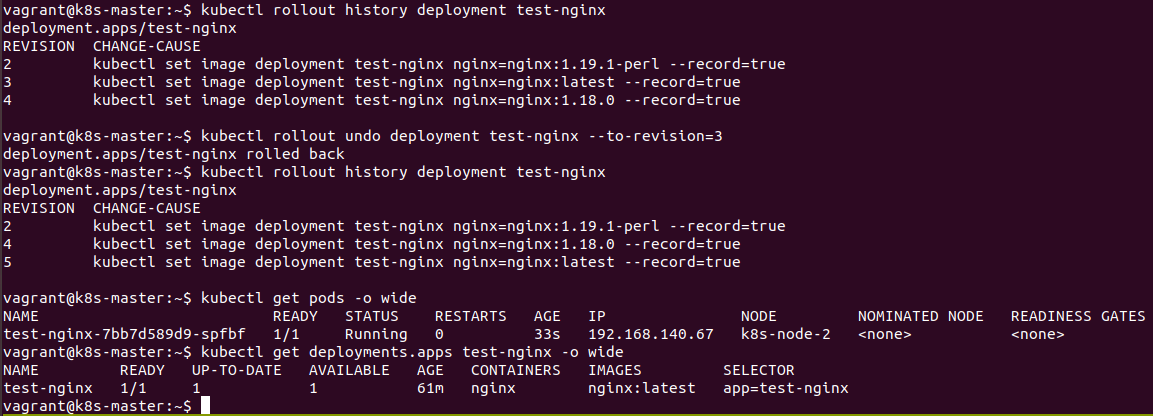
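
The relationship between the ReplicaSets, their images and the PODs can be inspected with a couple of label-filtered queries. This is a sketch assuming the app=test-nginx label from the manifest; the helper names are hypothetical.

```shell
# Hypothetical helpers. Argument: value of the app label (test-nginx here).
list_replicasets() {
  # -o wide adds the IMAGES column, so each ReplicaSet's image is visible.
  kubectl get replicasets -l "app=$1" -o wide
}

list_pods() {
  # --show-labels reveals the pod-template-hash label that each POD
  # shares with the ReplicaSet that created it.
  kubectl get pods -l "app=$1" --show-labels
}

# Usage (needs access to the cluster):
#   list_replicasets test-nginx
#   list_pods test-nginx
```

Old ReplicaSets should appear with zero desired replicas, each still carrying the image it was created with.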

Rolling Back to a Previous Version

There are a number of ways that we can roll back to the latest version of NGINX. All the previous methods will work, as will updating the YAML manifest file (as usual this is probably the best way of performing updates, as the file itself documents exactly how we want the Deployment to be set up).
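
As a rough sketch of the manifest route, the image tag can be changed in test-nginx.yaml and the file applied again. The helper name set_manifest_tag is hypothetical, and the sed pattern assumes the manifest contains a single image: nginx:... line as in the example above.

```shell
# Hypothetical helper: rewrite the nginx image tag in a manifest file in place.
# Arguments: manifest path, new tag.
set_manifest_tag() {
  sed "s|image: nginx:.*|image: nginx:$2|" "$1" > "$1.tmp" &&
  mv "$1.tmp" "$1"
}

# Usage (the apply step needs access to the cluster):
#   set_manifest_tag test-nginx.yaml latest
#   kubectl apply -f test-nginx.yaml
```

Because the Deployment was originally created with kubectl create, kubectl apply will typically print a warning about a missing last-applied-configuration annotation the first time it is used.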

For the purposes of our testing we will use the rollout command to perform the rollback.

kubectl rollout undo deployment test-nginx --to-revision=3

It can be seen that once again we are running the latest version of NGINX and that the rollout history has been updated accordingly. The running POD has a new name, reflecting that it was created by the previous ReplicaSet (scaling that ReplicaSet back up is the real mechanism for the rollback).

The previous revision (3 in this case) no longer appears under its old number; it has been re-recorded as the newest revision at the bottom of the list.

By using the --to-revision option as part of the rollout command it is possible to change between any of the revisions within the history.
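
When --to-revision is left out, kubectl rollout undo goes back to the revision immediately before the current one. The sketch below wraps both forms and waits for the result; the function name rollback is our own.

```shell
# Hypothetical helper: roll a Deployment back and wait for it to settle.
# Arguments: deployment name, optional revision number.
rollback() {
  if [ -n "${2:-}" ]; then
    kubectl rollout undo "deployment/$1" --to-revision="$2"
  else
    kubectl rollout undo "deployment/$1"   # no revision given: previous revision
  fi &&
  kubectl rollout status "deployment/$1"
}

# Usage (needs access to the cluster):
#   rollback test-nginx 3
#   rollback test-nginx
```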

Note

The number of old revisions that are kept is set in the YAML manifest file by revisionHistoryLimit: 10 within the spec section.
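
The limit can also be read or changed on the live Deployment without editing the file. This is a sketch using kubectl get and kubectl patch; the helper names are hypothetical, and any change made this way should be copied back into the manifest to keep the two in step.

```shell
# Hypothetical helpers for the revision history limit of a Deployment.
history_limit() {
  # Argument: deployment name. Prints the current limit.
  kubectl get deployment "$1" -o jsonpath='{.spec.revisionHistoryLimit}'
}

set_history_limit() {
  # Arguments: deployment name, new limit.
  kubectl patch deployment "$1" -p "{\"spec\":{\"revisionHistoryLimit\":$2}}"
}

# Usage (needs access to the cluster):
#   history_limit test-nginx
#   set_history_limit test-nginx 5
```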

Conclusions

There are a number of ways of defining the image that will be used to create the containers within the PODs running within a Deployment. It is possible to make quick changes by running various kubectl commands or by updating the actual YAML manifest file.

The mechanism behind the process is the ReplicaSets that are automatically generated by the Deployment object. As well as defining the number of replicas running, each one also carries the POD template, and therefore the image, used to create the running containers.

Obviously, as versions are updated or changed, the associated PODs are deleted and re-created. Connections to these endpoints are handled by the Services that select the PODs by label, and the Services themselves remain constant within the cluster.