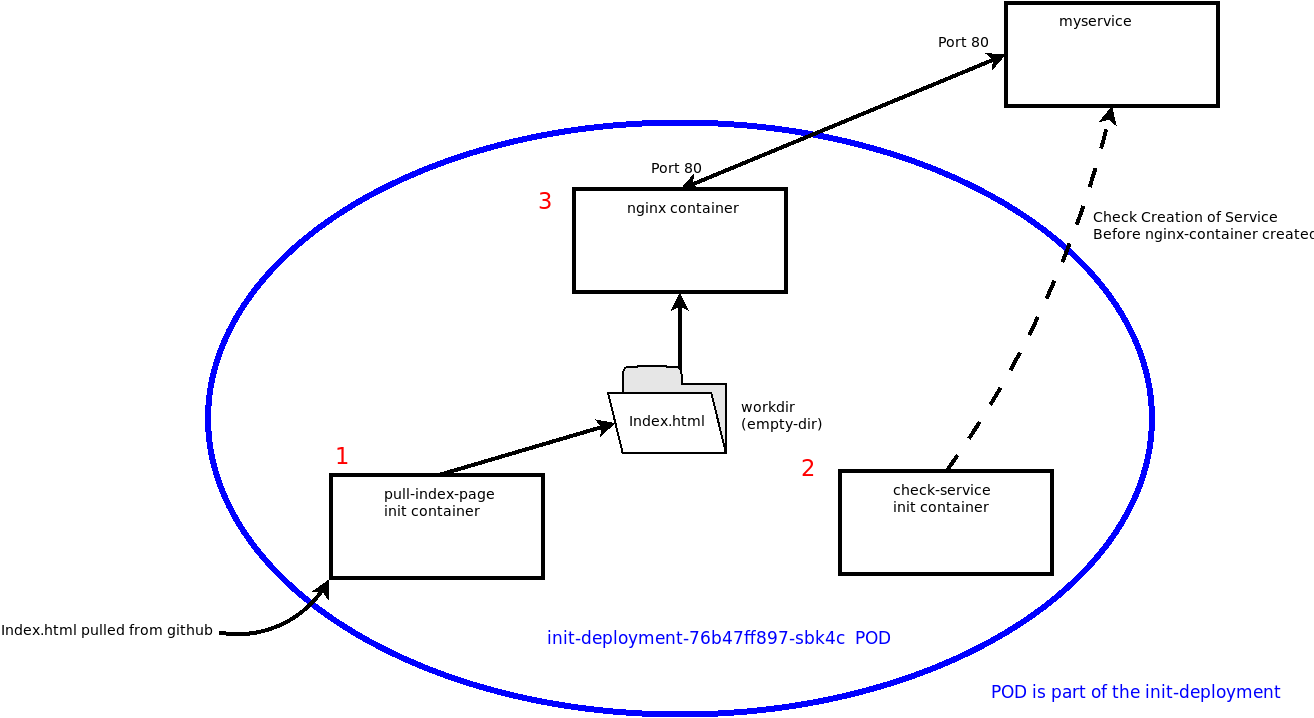

Kubernetes PODs often contain just a single container, but it is perfectly possible to run several containers in the same POD. All the containers within a POD share the same IP address and can also share a volume to exchange data.

Init containers are containers that are started and executed before the other containers within the POD. Init containers always run to completion and each must complete before the next one starts. The other containers within the POD will not be started until after the Init containers have completed.
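The ordering described above can be sketched with a minimal Pod manifest. This is an illustration only: the Pod name, container names and echo commands are hypothetical and are not part of the repo used later in this post.

```yaml
# Minimal sketch of Init container ordering (all names are hypothetical).
apiVersion: v1
kind: Pod
metadata:
  name: init-order-demo
spec:
  initContainers:
    # Runs first and must exit with code 0 before "second" is started.
    - name: first
      image: busybox
      command: ["sh", "-c", "echo first init step; sleep 5"]
    # Runs only after "first" has completed.
    - name: second
      image: busybox
      command: ["sh", "-c", "echo second init step; sleep 5"]
  containers:
    # Started only once both Init containers have completed.
    - name: app
      image: busybox
      command: ["sh", "-c", "echo app started; sleep 3600"]
```

While the Init containers are still running, `kubectl get pods` should report the Pod with an `Init:N/2` style status rather than `Running`.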

In this post we will create a Deployment that runs an NGINX container in a POD alongside two Init containers. The Init containers ensure that the following conditions are met:

- The first Init container will pull down an index page from a remote GitHub repo with a suitable test page. A shared emptyDir volume will be used to allow the NGINX container to mount the index page.

- The second Init container will wait until a service called myservice, which will expose the deployment, has been created. It runs a simple script that repeats an nslookup for the myservice DNS entry until the lookup succeeds, which happens once the service exists.

In this way the NGINX container will not be created until both preconditions have been met.
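As a rough guide, a Deployment along the following lines would produce the behaviour described. It is a sketch reconstructed from the `kubectl describe` output shown later in this post, not a copy of the file in the repo. The `apiVersion`, the `replicas` value and the exact layout are assumptions, while the container names, images, commands, mounts and resource settings are taken from that output.

```yaml
# Sketch of my-test-deployment.yaml, reconstructed from the describe output.
apiVersion: apps/v1            # assumed
kind: Deployment
metadata:
  name: init-deployment
  namespace: test
spec:
  replicas: 1                  # assumed
  selector:
    matchLabels:
      app: init-deployment
  template:
    metadata:
      labels:
        app: init-deployment   # matched by the myservice selector
    spec:
      volumes:
        # Scratch volume shared by the first Init container and NGINX.
        - name: workdir
          emptyDir: {}
      initContainers:
        # 1. Fetch the test index page into the shared volume.
        - name: pull-index-page
          image: busybox
          command:
            - wget
            - "-O"
            - /work-dir/index.html
            - https://raw.githubusercontent.com/salterje/testindexpage/master/index.html
          volumeMounts:
            - name: workdir
              mountPath: /work-dir
          resources:
            limits: {cpu: 500m, memory: 512Mi}
            requests: {cpu: 100m, memory: 256Mi}
        # 2. Block until the myservice DNS entry resolves.
        - name: check-service
          image: busybox:1.28
          command:
            - sh
            - -c
            - "until nslookup myservice.$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace).svc.cluster.local; do echo waiting for myservice; sleep 2; done"
          resources:
            limits: {cpu: 500m, memory: 512Mi}
            requests: {cpu: 100m, memory: 256Mi}
      containers:
        # Started only after both Init containers have completed.
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: workdir
              mountPath: /usr/share/nginx/html
          resources:
            limits: {cpu: 500m, memory: 512Mi}
            requests: {cpu: 100m, memory: 256Mi}
```

The same volume is mounted at `/work-dir` in the first Init container and at `/usr/share/nginx/html` in the NGINX container, which is how the downloaded page ends up being served.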

All the YAML files can be found at https://github.com/salterje/initContainers.git and assume a namespace called test. Either create that namespace or edit the manifests before applying them.

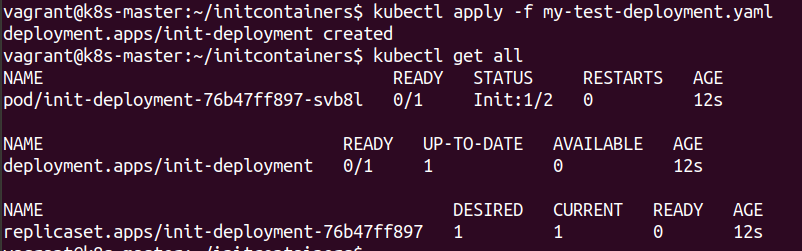

We can apply the manifest in the usual way:

    kubectl apply -f my-test-deployment.yaml

However, something interesting happens when we view all the objects in the namespace.

The POD has not started its main container. The clue is the status Init:1/2, which tells us that only one of the two Init containers has completed.

We can get more information on what is happening by running:

    kubectl describe pod init-deployment-76b47ff897-svb8l

The Init Containers section of the output shows the status of both Init containers.

The first Init container, called pull-index-page, has completed its command, which was a simple wget to grab the test index page that will be served by NGINX. Its state shows that it terminated after completing the command, with an exit code of 0.

    Init Containers:
      pull-index-page:
        Container ID:  docker://51d74db9585d41f11e872b98ef788ac5d02f5512c05f779fd03b28d6dfbe9653
        Image:         busybox
        Image ID:      docker-pullable://busybox@sha256:d366a4665ab44f0648d7a00ae3fae139d55e32f9712c67accd604bb55df9d05a
        Port:          <none>
        Host Port:     <none>
        Command:
          wget
          -O
          /work-dir/index.html
          https://raw.githubusercontent.com/salterje/testindexpage/master/index.html
        State:          Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Thu, 24 Sep 2020 19:19:58 +0000
          Finished:     Thu, 24 Sep 2020 19:19:58 +0000
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     500m
          memory:  512Mi
        Requests:
          cpu:        100m
          memory:     256Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-dv297 (ro)
          /work-dir from workdir (rw)

The second Init container, which checks for the presence of the myservice object, has not completed and is still running. This means that the NGINX container within the POD cannot be created yet.

      check-service:
        Container ID:  docker://26486a0fb8bcb0f50dacd79960d8d922d6c25e0fbc4e2f15a76c1ce324c98c2e
        Image:         busybox:1.28
        Image ID:      docker-pullable://busybox@sha256:141c253bc4c3fd0a201d32dc1f493bcf3fff003b6df416dea4f41046e0f37d47
        Port:          <none>
        Host Port:     <none>
        Command:
          sh
          -c
          until nslookup myservice.$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace).svc.cluster.local; do echo waiting for myservice; sleep 2; done
        State:          Running
          Started:      Thu, 24 Sep 2020 19:20:00 +0000
        Ready:          False
        Restart Count:  0
        Limits:
          cpu:     500m
          memory:  512Mi
        Requests:
          cpu:        100m
          memory:     256Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-dv297 (ro)

This is confirmed by the Containers and Conditions sections of the POD description.

    Containers:
      nginx:
        Container ID:
        Image:          nginx
        Image ID:
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Waiting
          Reason:       PodInitializing
        Ready:          False
        Restart Count:  0
        Limits:
          cpu:     500m
          memory:  512Mi
        Requests:
          cpu:        100m
          memory:     256Mi
        Environment:  <none>
        Mounts:
          /usr/share/nginx/html from workdir (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-dv297 (ro)
    Conditions:
      Type              Status
      Initialized       False
      Ready             False
      ContainersReady   False
      PodScheduled      True

It can be seen that the main container is stuck in an initializing state.

The second Init container has not completed because the myservice object does not exist yet. This is easy to fix by applying the following manifest, which creates the service. Its selector matches the label on the PODs created by the deployment, so the service will route traffic to the NGINX container.

    apiVersion: v1
    kind: Service
    metadata:
      name: myservice
      namespace: test
    spec:
      selector:
        app: init-deployment
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

The manifest is applied with:

    kubectl apply -f myservice.yaml

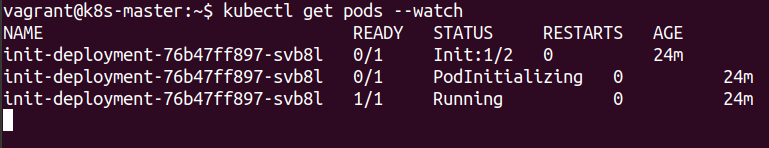

We can observe the PODs being created:

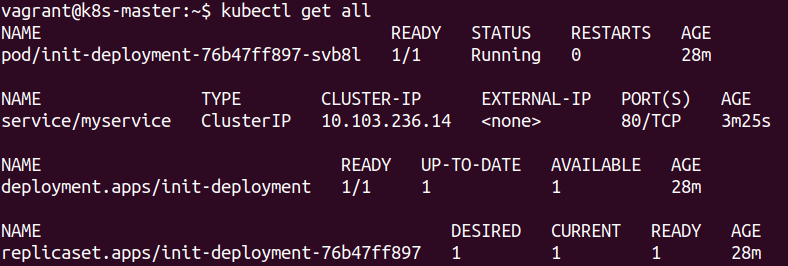

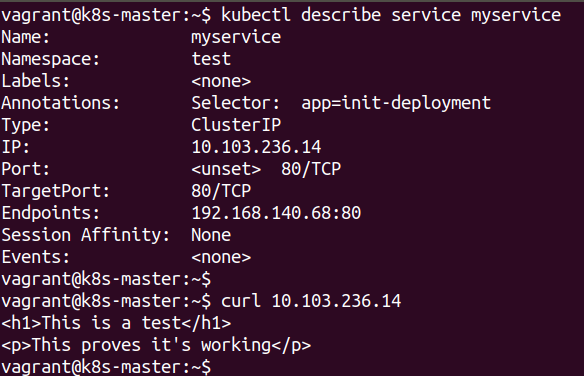

We can confirm that everything has been created, including the myservice object, that will allow connection to the POD.

We can now connect to the NGINX container using the ClusterIP address of the myservice service.

Of course we have only created an internal service, meaning the page is not viewable from outside the cluster.

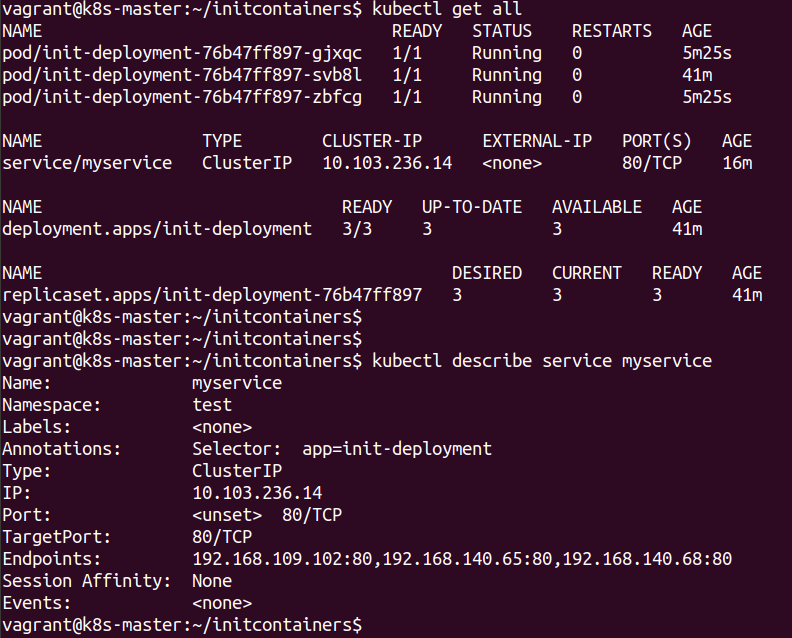

Scaling the Deployment

As we have created a Deployment, it is easy to scale up the number of PODs. Each new POD works in the same way, running its own Init containers before NGINX starts. The myservice object will create endpoints for all of the NGINX containers, allowing load balancing across them, and the Kubernetes scheduler will normally spread the PODs across the available worker nodes in the cluster.

    kubectl scale deployment init-deployment --replicas=3 --record
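The same change can also be made declaratively by editing the Deployment manifest and re-applying it. The fragment below is a sketch showing only the field that changes. It assumes the Deployment is called init-deployment in the test namespace, as in the commands above, and that the rest of the manifest is left as it was.

```yaml
# Fragment only: the selector and template sections are unchanged and omitted here.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: init-deployment
  namespace: test
spec:
  replicas: 3   # was 1 (assumed) before scaling
```

Keeping the replica count in the manifest means the file in version control continues to describe what is actually running.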

Conclusions

This lab has shown how a POD can have multiple containers within it, all sharing the same IP address space and able to make use of a shared volume. Init containers allow the environment to be prepared before the application starts. In our case, some content was pulled down from an external GitHub repo, and the NGINX container was held back until the relevant service existed. Other uses for this sort of functionality include waiting for a database to be ready.
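As an illustration of the database case, an Init container could poll the database until it accepts connections. The fragment below is a hypothetical sketch and not part of this lab. It assumes a PostgreSQL database reachable through a service named mydb on port 5432, and uses the `pg_isready` client that ships in the postgres image.

```yaml
# Fragment of a Pod spec (hypothetical): wait for PostgreSQL before starting the app.
initContainers:
  - name: wait-for-db            # hypothetical name
    image: postgres              # used only for its pg_isready client
    command:
      - sh
      - -c
      # pg_isready exits 0 once the server accepts connections.
      - "until pg_isready -h mydb -p 5432; do echo waiting for mydb; sleep 2; done"
```

Unlike the nslookup check used in this post, which only proves that the service object exists, this kind of check waits until the backend is actually answering.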

We also made use of a shared volume to share data within the POD. The Init container wrote the downloaded page to the volume, and the NGINX container mounted the same volume to serve it.

The Init containers must run to completion before the main container can start, meaning the application will always have its correct content loaded and a suitable service ready for connections from other PODs within the cluster.